Egocentric Video Summarization

Typical egocentric video summarization approaches focus on motion analysis and social interaction, without considering that different end users may want to preserve only the scenes of the original video that match their specific preferences. In this paper we have proposed a new method for personalized video summarization of cultural experiences. Its goal is to extract from the streams only the scenes that correspond to the topics requested by a user, chosen among shots of high visual quality, which we identify as those in which the attention behaviour pattern of the camera wearer can be deduced.

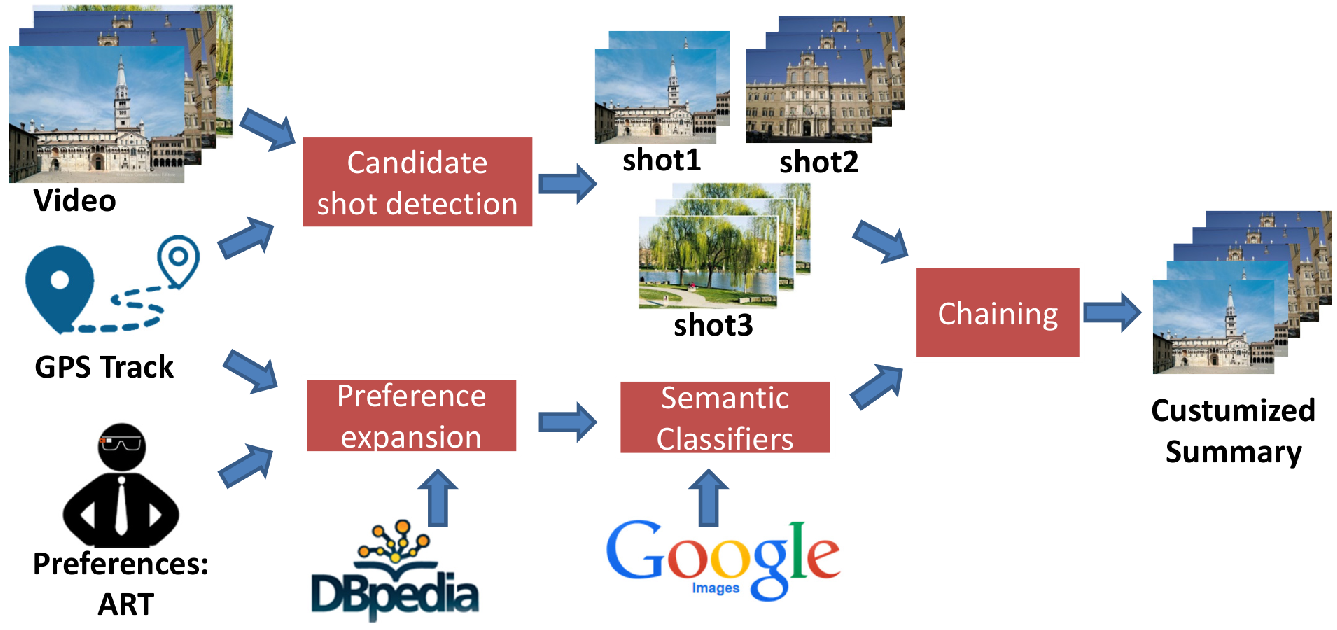

Our approach is composed of two main tasks. The first identifies candidate relevant scenes by discarding all groups of frames that relate to irrelevant experiences or in which the observer is changing the focus of attention; it ends with candidate shot detection and keyframe extraction. The second task extracts from the candidate shots only those that maximize the score of semantic relatedness to the preferences declared by the user. To this end, we measure importance as semantic relatedness to the user input by exploiting DBpedia, dynamically build a specific classifier for each topic of interest, and select the scenes that achieve the highest score. Moreover, the visual diversity of the scenes is evaluated in order to discard redundant information from the summary.
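
The final selection step of the second task can be illustrated with a minimal sketch. It is not the method's actual implementation: it assumes that each candidate shot already carries a semantic relatedness score and a visual descriptor of its keyframe, and it swaps in a simple greedy rule with a cosine-similarity threshold as the diversity test. The names `Shot`, `select_summary`, and `similarity_threshold` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List


@dataclass
class Shot:
    shot_id: str
    relevance: float       # assumed: semantic relatedness to the user's topics
    features: List[float]  # assumed: visual descriptor of the shot's keyframe


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_summary(shots: List[Shot], max_shots: int,
                   similarity_threshold: float = 0.9) -> List[Shot]:
    """Greedily keep the most relevant shots, skipping visual near-duplicates."""
    selected: List[Shot] = []
    # Visit candidates from most to least relevant.
    for shot in sorted(shots, key=lambda s: s.relevance, reverse=True):
        if len(selected) >= max_shots:
            break
        # Keep the shot only if it is visually distinct from every kept shot.
        if all(cosine_similarity(shot.features, kept.features) < similarity_threshold
               for kept in selected):
            selected.append(shot)
    return selected


candidates = [
    Shot("a", 0.9, [1.0, 0.0]),
    Shot("b", 0.8, [0.99, 0.05]),  # visually close to "a"
    Shot("c", 0.5, [0.0, 1.0]),
]
summary = select_summary(candidates, max_shots=2)
```

In this toy input, shot `b` is more relevant than `c` but nearly identical in appearance to `a`, so the greedy rule drops it in favour of the visually different shot. The threshold value is an arbitrary choice for the example and would need tuning on real descriptors.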