European Lighthouse on Secure and Safe AI

About ELSA

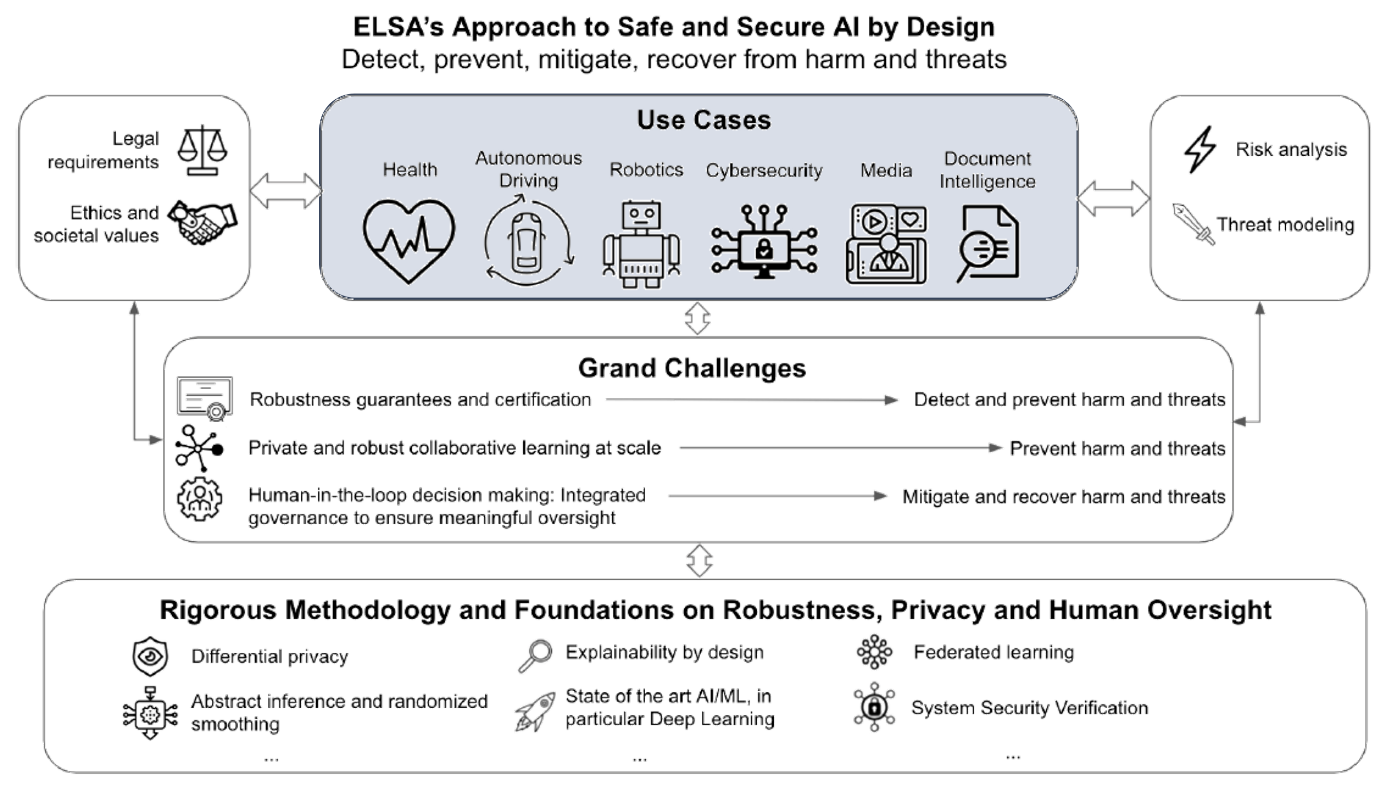

The European Lighthouse on Secure and Safe AI (ELSA) aspires to define research agendas and lead research efforts in three key areas of Artificial Intelligence: technical robustness and safety, privacy, and human agency and oversight. These topics reflect core European values, and it is of strategic importance that Europe take the lead in this research effort. Progress in these areas is key to enabling AI researchers and practitioners to design systems that can detect, prevent, mitigate and recover from harms and threats.

To advance these research areas, several important questions must be addressed: how to define robustness guarantees in connection with the certification of AI systems, how to scale private and robust collaborative learning up to real-life scenarios, and how to efficiently introduce human-in-the-loop decision making into AI systems. These questions form the basis of the three Grand Challenges that ELSA puts forward.

To ensure real-life impact, the ELSA Grand Challenges, which address basic research, will be coupled with six Use Cases that define real-life scenarios where these research results are urgently needed and have the potential to create widespread commercial and social impact. The ELSA Use Cases focus on Health, Autonomous Driving, Robotics, Multimedia, Cybersecurity and Document Intelligence.

Planned Activities in the Multimedia Use Case

Machine-generated images are becoming increasingly popular in the digital world, thanks to the spread of deep learning models that can generate visual data, such as Generative Adversarial Networks and Diffusion Models. While image generation tools can be employed for lawful goals (e.g., to assist content creators, generate simulated datasets, or enable multimodal interactive applications), there is growing concern that they may also be used for illegal and malicious purposes, such as forging natural images or generating images in support of fake news, misogyny or revenge porn. Whereas images generated only a few years ago contained artefacts that made them easy to recognize, today's outputs are far harder to tell apart from real images on a purely perceptual basis. In this context, assessing image authenticity becomes a fundamental goal, both for security and for guaranteeing a degree of trustworthiness in AI algorithms. There is therefore a growing need for automated methods that can assess the authenticity of images (and, more generally, of multimodal content) and that can keep pace with the constant evolution of generative models, which become more realistic over time.

The ELSA Use Case on Multimedia focuses on developing benchmarks and tools for Fake Data Understanding and Detection, with the ultimate goal of protecting against visual disinformation and the misuse of generated images, and of monitoring the progress of existing and newly proposed detection solutions. It will investigate novel ways of understanding and detecting fake data through new machine learning approaches capable of combining syntactic and perceptual analysis. The Use Case also promotes a deepfake detection competition connected to the ELSA Grand Challenge on “Human in the loop decision making”. The competition will monitor and evaluate the development of deepfake detection algorithms in terms of efficacy, explainability and human oversight, enabling domain experts to validate and improve results in a human-in-the-loop fashion. The Use Case will be connected to existing initiatives and will include the creation of new datasets for these topics.

Collecting and generating data is a crucial step in developing the benchmark. We will leverage existing deepfake detection datasets and generate new data as part of the Use Case. A first result in this direction is the COCOFake dataset, generated by UNIMORE using the CINECA supercomputing facilities. The dataset consists of more than 1.2M images generated with Stable Diffusion v1.4 and v2.0 from textual prompts taken from the COCO image captioning dataset. Because COCO images are typically annotated with five captions each, the dataset contains clusters of five generated images that share the same semantics but were generated from five different textual prompts. Compared with existing deepfake detection datasets, it offers more diversity and uniform coverage of semantic classes, and it can easily be expanded to a larger scale.
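To make the cluster structure concrete, here is a minimal Python sketch that groups generated images by the COCO image whose captions served as prompts. It is only an illustration: the record fields (`coco_image_id`, `caption`, `generator`, `path`) and the generator tags are hypothetical and do not describe the actual COCOFake file format, and grouping separately per Stable Diffusion version is an assumption.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical record layout; the real COCOFake release may store this differently.
@dataclass(frozen=True)
class GeneratedSample:
    coco_image_id: int  # id of the COCO image whose caption served as the prompt
    caption: str        # the textual prompt (one COCO caption)
    generator: str      # hypothetical tag, e.g. "sd-v1.4" or "sd-v2.0"
    path: str           # hypothetical location of the generated image

ClusterKey = Tuple[int, str]

def build_clusters(samples: List[GeneratedSample]) -> Dict[ClusterKey, List[GeneratedSample]]:
    """Group generated images sharing the same COCO image (same semantics) and generator."""
    clusters: Dict[ClusterKey, List[GeneratedSample]] = defaultdict(list)
    for sample in samples:
        clusters[(sample.coco_image_id, sample.generator)].append(sample)
    return dict(clusters)

def irregular_clusters(clusters: Dict[ClusterKey, List[GeneratedSample]],
                       expected: int = 5) -> List[ClusterKey]:
    """Return the keys of clusters whose size differs from the expected five prompts."""
    return [key for key, members in clusters.items() if len(members) != expected]

if __name__ == "__main__":
    demo = [
        GeneratedSample(42, f"caption {i}", "sd-v1.4", f"fake/42_{i}.png") for i in range(5)
    ] + [GeneratedSample(7, "a single caption", "sd-v2.0", "fake/7_0.png")]
    clusters = build_clusters(demo)
    print(len(clusters))                 # 2
    print(irregular_clusters(clusters))  # [(7, 'sd-v2.0')]
```

Keeping the cluster key explicit makes it easy to check that each semantic group is complete before splitting the data, so that all five images of a cluster end up in the same split.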

ELSA Multimedia Benchmark - Track 1 opens

Join our thrilling deepfake detection competition and put your skills to the test! As deepfake technology poses unprecedented challenges, we invite individuals and teams from all backgrounds to showcase their expertise in identifying and debunking manipulated media. The first track of the competition is now open. It focuses on detecting fully generated images: a binary classification task in which participants distinguish fake images from real ones using machine learning and deep learning-based approaches. The competition will run in periodic evaluation rounds, and new versions of the dataset will be released progressively, improving the quality, quantity and variety of generated images so that the benchmark is as representative as possible of image manipulation scenarios in the media.

More details about the Multimedia track are available here.
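Track 1 is framed as binary classification, but the official evaluation metric is not stated here. As a hedged illustration, the Python sketch below scores a detector's fake-probability outputs with plain and balanced accuracy; the label convention (1 = fake, 0 = real), the 0.5 threshold and the function name `evaluate_detector` are assumptions, not the competition's protocol.

```python
import math
from typing import Dict, Sequence

# Assumed label convention: 1 = fake (generated), 0 = real.
def evaluate_detector(scores: Sequence[float], labels: Sequence[int],
                      threshold: float = 0.5) -> Dict[str, float]:
    """Threshold fake-probability scores and report overall and per-class accuracy."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and of equal length")
    preds = [1 if s >= threshold else 0 for s in scores]
    correct = [p == y for p, y in zip(preds, labels)]

    def class_accuracy(cls: int) -> float:
        # Fraction of samples of class `cls` that were classified correctly (NaN if absent).
        hits = [c for c, y in zip(correct, labels) if y == cls]
        return sum(hits) / len(hits) if hits else math.nan

    fake_acc, real_acc = class_accuracy(1), class_accuracy(0)
    return {
        "accuracy": sum(correct) / len(labels),
        "fake_accuracy": fake_acc,  # recall on generated images
        "real_accuracy": real_acc,  # recall on real images
        # Mean of per-class accuracies; less sensitive to a fake/real imbalance.
        "balanced_accuracy": (fake_acc + real_acc) / 2,
    }
```

For example, `evaluate_detector([0.9, 0.8, 0.1, 0.2], [1, 1, 1, 0])` gives an accuracy of 0.75 and a balanced accuracy of about 0.83, because one of the three fake images is missed while the single real image is classified correctly. Balanced accuracy is reported alongside plain accuracy since generated images may outnumber real ones in a benchmark.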

ELSA D3 Benchmark - New training dataset released

Current deepfake detection datasets lack diversity in terms of image generators and are limited in size. To address this limitation, we have developed and released a new dataset, the Diffusion-generated Deepfake Detection (D3) dataset. It comprises almost 2.3 million records and 11.5 million images: each record includes a prompt, a genuine image and four images produced by different generators. Both prompts and authentic images are sourced from LAION-400M, while the fake images are generated with different text-to-image generators. The dataset aims to support training deepfake detection methods from scratch.

More details about the D3 training dataset are available here.
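Since every D3 record bundles one real image with four generated ones (hence roughly 2.3 million × 5 ≈ 11.5 million images), a training pipeline typically flattens records into labelled images. The Python sketch below shows one way to do this; the `D3Record` field names, the 1 = fake / 0 = real convention and the class-weighting step are illustrative assumptions, not the official D3 loader.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class D3Record:
    prompt: str                   # caption sourced from LAION-400M
    real_image: str               # hypothetical path/URL of the genuine LAION-400M image
    fake_images: Tuple[str, ...]  # hypothetical paths, one per text-to-image generator (four in D3)

def to_labeled_pairs(records: List[D3Record]) -> List[Tuple[str, int]]:
    """Flatten records into (image, label) pairs; label 1 = fake, 0 = real (assumed)."""
    pairs: List[Tuple[str, int]] = []
    for record in records:
        pairs.append((record.real_image, 0))
        pairs.extend((fake, 1) for fake in record.fake_images)
    return pairs

def balanced_class_weights(pairs: List[Tuple[str, int]]) -> Dict[int, float]:
    """Inverse-frequency weights: n_samples / (n_classes * count_per_class)."""
    counts = Counter(label for _, label in pairs)
    total, n_classes = len(pairs), len(counts)
    return {label: total / (n_classes * count) for label, count in counts.items()}
```

With four fakes per real image, a single record yields weights of 2.5 for the real class and 0.625 for the fake class, so both classes contribute equally to a weighted loss. Weighting is just one option; sampling one fake image per record is another way to handle the 4:1 imbalance.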

Stay tuned for the release of a new test set on the ELSA platform.

Publications

1. Sarto, Sara; Cornia, Marcella; Baraldi, Lorenzo; Nicolosi, Alessandro; Cucchiara, Rita. "Towards Retrieval-Augmented Architectures for Image Captioning." ACM Transactions on Multimedia Computing, Communications and Applications, vol. 20, pp. 1-22, 2024. DOI: 10.1145/3663667. (Journal)

2. Amoroso, Roberto; Morelli, Davide; Cornia, Marcella; Baraldi, Lorenzo; Del Bimbo, Alberto; Cucchiara, Rita. "Parents and Children: Distinguishing Multimodal DeepFakes from Natural Images." ACM Transactions on Multimedia Computing, Communications and Applications, vol. 21, pp. 1-22, 2024. DOI: 10.1145/3665497. (Journal)

3. Sarto, Sara; Barraco, Manuele; Cornia, Marcella; Baraldi, Lorenzo; Cucchiara, Rita. "Positive-Augmented Contrastive Learning for Image and Video Captioning Evaluation." Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, vol. 2023, pp. 6914-6924, Vancouver, Canada, June 18-22, 2023. DOI: 10.1109/CVPR52729.2023.00668. (Conference)

4. Betti, Federico; Staiano, Jacopo; Baraldi, Lorenzo; Baraldi, Lorenzo; Cucchiara, Rita; Sebe, Nicu. "Let's ViCE! Mimicking Human Cognitive Behavior in Image Generation Evaluation." Proceedings of the 31st ACM International Conference on Multimedia (MM 2023), pp. 9306-9312, Ottawa, Canada, October 29 - November 3, 2023. DOI: 10.1145/3581783.3612706. (Conference)

5. Caffagni, Davide; Barraco, Manuele; Cornia, Marcella; Baraldi, Lorenzo; Cucchiara, Rita. "SynthCap: Augmenting Transformers with Synthetic Data for Image Captioning." Proceedings of the 22nd International Conference on Image Analysis and Processing, vol. 14233, pp. 112-123, Udine, Italy, September 11-15, 2023. DOI: 10.1007/978-3-031-43148-7_10. (Conference)

6. Cocchi, Federico; Baraldi, Lorenzo; Poppi, Samuele; Cornia, Marcella; Baraldi, Lorenzo; Cucchiara, Rita. "Unveiling the Impact of Image Transformations on Deepfake Detection: An Experimental Analysis." Image Analysis and Processing, ICIAP 2023, Part II, vol. 14234, pp. 345-356, Udine, Italy, September 11-15, 2023. DOI: 10.1007/978-3-031-43153-1_29. (Conference)
