AImageLab datasets

|

Diffusion-generated Deepfake Detection dataset (D3)Keywords: deepfake detection, image generation |

|

ELSA Deepfake benchmarkKeywords: deepfake detection, image generation |

|

CarPatchCarPatch, a novel synthetic benchmark of vehicles. In addition to a set of images annotated with their intrinsic and extrinsic camera parameters, the corresponding depth maps and semantic segmentation masks have been generated for each view. Keywords: Synthetic vehicle dataset, NeRF, Volumetric rendering |

|

INSECTT-SETA public Tranport Anomaly datasetLong, untrimmed real-world surveillance videos with 11 realistic anomalies recorded with a series of CCTV cameras placed inside SETA buses. The datset has been acquired inside the INSECTT projcet and comprises: up to 5 cameras with multiple angles; 31 hours of video; divided in 182 sequence. Keywords: Anomaly Detection, HBU, Public Transport |

|

ArtGallery3D (AG3D)ArtGallery3D (AG3D) is a 3D model of a museum for embodied exploration and navigation presenting unique features when compared to flats and offices contained in traditional embodied datasets. First, the dimension of the rooms drastically increases, and the same goes for the size of the building itself. In our 3D model, some rooms are as big as 20×15 meters, while the floor hosting the art gallery spans a total of 2,000 square meters. However, dimensions are not the only difference with current available 3D spaces. As a second factor, the presented gallery is incredibly rich in visual features, offering multiple paintings, sculptures, and rare objects of historical and artistic interest. The dataset contains also episodes for embodied exploration and navigation. For the navigation task, we annotate the position of most of the points of interest in the museum. Examples include numerous paintings, sculptures, and other relevant objects. Keywords: Visual Navigation, Embodied AI, Simulated Environments, Visual Exploration |

|

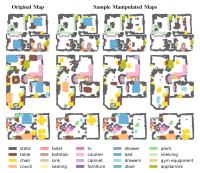

Spot the DifferenceSpot the Difference is a dataset of 2D semantics occupancy maps (SOMs) in which the objects can be added, removed, and rearranged while the area and the position of architectural elements do not change. In fact, using semantic annotations in Gibson and Matterport3D dataset we consider categories that have a high probability of being displaced or removed in the real world and ignore non-movable semantic categories such as fireplaces, columns, and stairs. The dataset contains also exploration episodes to train and evaluate an agent that is required to find the differences between the actual state of the map and an outdated version of it. Keywords: Visual Navigation, Embodied AI, Semantic Occupancy Maps, Visual Exploration |

|

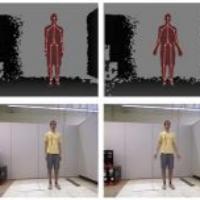

SimBaSimBa is a collection of synthetic and real data for the robot pose estimation task in collaborative environments. Among several collaborative robots, we chose the Rethink Baxter, which moves respectively to a set of random pick-n-place locations on a table, assuming realistic poses. The dataset contains RGB-D images with annotations for the 3D joints and the pick-n-place locations. The synthetic part contains over 350k annotated RGB-D images, while the real part contains over 20k annotated RGB-D frames. Keywords: Robot Pose Estimation, Synth/Real Dataset, RGB-D Data |

|

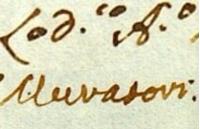

Ludovico Antonio Muratori (LAM)The Ludovico Antonio Muratori (LAM) dataset is the largest line-level HTR dataset to date and contains 25,823 lines from Italian ancient manuscripts edited by a single author over 60 years. The dataset comes in two configurations: a basic splitting and a date-based splitting which takes into account the age of the author. The first setting is intended to study HTR on ancient documents in Italian, while the second focuses on the ability of HTR systems to recognize text written by the same writer in time periods for which training data are not available. Keywords: Handwritten text Recognition, Digital Humanities |

|

Dress CodeDress Code is the the largest publicly available dataset for image-based virtual try-on. The dataset contains more than 50k high-resolution image pairs divided into three different categories (i.e. dresses, upper-body clothes, lower-body clothes). All images come from different catalogs of YOOX NET-A-PORTER. Keywords: virtual try-on, fashion |

|

MOTSynthMOTSynth is a huge dataset for pedestrian detection and tracking in urban scenarios created by exploiting the highly photorealistic video game Grand Theft Auto V developed by Rockstar North. We collected a set of 768 full-HD videos, one minute long, recorded at 25 fps. Keywords: people detection, tracking, re-identification, pose estimation, depth, segmentation, 3D, surveillance |

|

PREVUE SynthPREVUE SYNTH, child of MOTSynth, is a huge dataset for pedestrian detection and tracking in surveillance scenarios created by exploiting the highly photorealistic video game Grand Theft Auto V developed by Rockstar North. Keywords: pedestrian detection, tracking, surveillance, dataset |

|

MultiSFaceMultiSFace is a multi-modal dataset for the face recognition task. It consists of 32 different subjects at different distances, recorded with depth, infrared, thermal, and RGB sensors. It also contains a good coverage of accessories that occlude the face, such as eyeglasses, scarves, and hats. Keywords: face recognition, multi-sensor, depth maps, thermal, infrared, IR, RGB |

|

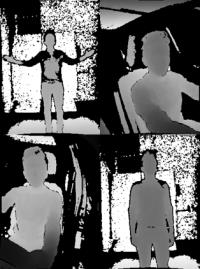

BaraccaBaracca is a dataset specifically collected for the anthropometric measurements estimation task on the human body. This dataset contains depth maps, infrared, thermal and RGB images, along with manually-collected human body measurements, such as BMI, height and weight. It has been published in the International Joint Conference on Biometrics (IJCB 2020). Keywords: Anthropometry, Depth Maps, Thermal, Infrared, RGB |

|

Briareo datasetWe propose a new dataset, called Briareo, specifically collected for the hand gesture recognition task in the automotive context. We focus on the acquisition of dynamic hand gestures, where each gesture is a combination of motion and one or more hand poses. Images have been collected from an innovative point of view: the acquisition devices are placed in the central tunnel between the driver and the passenger seats, orientated towards the car ceiling. In this way, visual occlusions produced by driver’s body can be mitigated. Keywords: Hand Gestures, Automotive, Infrared Images, Depth Maps, Hand Joints |

|

M-VAD NamesThe dataset consists of 84.6 hours of video from 92 Hollywood movies. For each movie, we provide the annotations of the characters' visual appearances, in the form of tracks of face bounding boxes, and the associations with the characters' textual mentions, when available. The released dataset contains more than 24k annotated video clips, including 63k visual tracks and 34k textual mentions, all associated with their character identities. Keywords: video captioning, naming, face identification |

|

AiC DatasetAiC (Attributes in Crowd) is a novel synthetic dataset for people attribute recognition in presence of strong occlusions created by exploiting the highly photorealistic video game Grand Theft Auto V developed by Rockstar North. It features 125,000 samples, all being a unique person, each of which is automatically labeled with information concerning visual attributes, as well as joint locations. Keywords: surveillance, attribute recognition, pose estimation, occlusion |

|

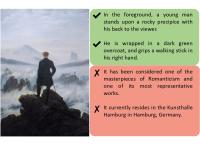

ArtpediaArtpedia contains a collection of 2,930 painting images, each associated to a variable number of textual descriptions. Each sentence is labelled either as a visual sentence or as a contextual sentence, if does not describe the visual content of the artwork. Overall, the dataset contains a total of 28,212 sentences, 9,173 labelled as visual sentences and the remaining 19,039 as contextual sentences. On average, each painting is associated with 3.1 visual and 6.5 contextual sentences. Keywords: cross-modal retrieval, visual-semantic models, cultural heritage |

|

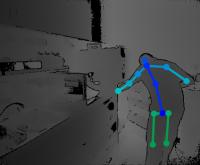

Watch-R-PatchWatch-R(efined)-Patch it's a more precise and accurate version of the public dataset Watch-N-Patch, which is composed by different actions from diffrent people acquired with Kinect One. The annotations in the original datasets are computed with the Kinect SDK, which is not reliable for generic actions in non-frontal position. We collect refined annotations using a quick and easy-to-use annotation tool, publicly released here. Hand-corrected joints gave us the opportunity to compare our result with real information. Keywords: Body Joints, Skeleton, Depth Maps |

|

TurmsWe aim to propose a new dataset, namely Turms, that contains infrared images of hands acquired placing an infrared camera -- a Leap Motion device -- that is able to provide high-quality and short-range images with a wide scene, thanks to its fish-eye lens. Keywords: Hand Detection, Hand Tracking, Automotive, Human Car Interaction |

|

JTA DatasetJTA is a synthetic large-scale dataset for human pose estimation and tracking in video acquired using the video game Grand Theft Auto 5 developed by Rockstar North. The recorded videos are chosen to contain a large amount of body pose appearance and scale variation, as well as body part occlusion and truncation. The videos also contain severe body motion, i.e., people occlude each other, re-appear after complete occlusion, vary in scale across the video, and also significantly change their body pose. The number of visible persons and body parts may also vary during the video. Furthermore, it provides a diversity of viewpoints including a rich variety of angles and camera movements. Keywords: pose estimation, tracking, re-identification, surveillance |

|

MotorMarkMotorMark is composed by more than 30k frames. A variety of subjects is guarantee (35 subjects in total). Keywords: facial landmark, depth images, automotive, landmarking |

|

Xdocs datasetXdocs is designed with the intention of extending to a much wider audience the possibility to access a variety of historical documents. To that purpose, a great amount of Italian historical birth certificates documents of the XIX century has been collected. Moreover, to test and evaluate the Xdocs tools (Word Spotting and Annotation), a huge collection of single word images has been collected and annotated.

Keywords: Handwritten historical documents, Italia Civil registries, Word Spotting, Word Annotation |

|

Surrounding Vehicles Awareness datasetIn order to collect data, we exploit Script Hook V library synthetic_scripthook, which allows using Grand Theft Auto V (GTAV) video game native functions. Keywords: automotive, gta, surrounding vehicles |

|

BBC Planet Earth DatasetThe BBC Planet Earth dataset contains ground truth shots and scene annotation for each of the 11 eposides of the BBC Planet Earth educational TV Series. Each shot and scene has been manually annotated and verified by a set of human experts. Moreover, being scene detection a subjective task, we collect scene annotations from 5 different people. Keywords: scene detection, shot detection |

|

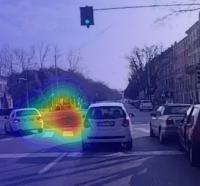

DR(eye)VEDR(eye)VE: a dataset for attention-based tasks with applications to autonomous and assisted driving. Keywords: driver attention, driver gaze, ADAS, assisted driving, autonomous driving |

|

PandoraPandora has been specifically created for head center localization, head pose and shoulder pose estimation and is inspired by the automotive context. A frontal fixed device acquires the upper body part of the subjects, simulating the point of view of camera placed inside the dashboard. Subjects also perform driving-like actions, such as grasping the steering wheel, looking to the rear-view or lateral mirrors, shifting gears and so on. Keywords: head pose, shoulder pose, automotive, cvpr |

|

Interactive Museum for Gesture RecognitionThe Interactive Museum dataset consists of 700 video sequences, all shot with a wearable camera, taken in a interactive exhibition room, in which paintings and artworks are projected over a wall in a virtual museum fashion. The camera is placed on the user’s head and captures a 800x450, 25 frames per second 24-bit RGB image sequence. Five different users perform seven hand gestures: like, dislike, point, ok, slide left to right, slide right to left and take a picture. Keywords: gesture recognition, cultural heritage |

|

Maramotti Dataset for Gesture RecogntionThis dataset contains videos taken in the Maramotti modern art museum, in which paintings, sculptures and objets d’art are exposed. The camera is placed on the user’s head and captures a 800x450, 25 frames per second image sequence. The Maramotti dataset contains 700 video sequences, recorded by five different persons, each performing seven hand gestures in front of different artworks: like, dislike, point, ok, slide left to right, slide right to left and take a picture. Some of them (like the point, ok, like and dislike gestures) are statical, others (like the two slide gestures) are dynamical. Keywords: gesture recognition, cultural heritage |

|

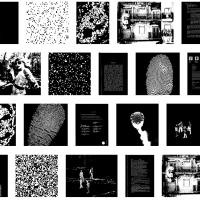

YACCLABFollowing a common practice in the literature, we built a dataset that includes both synthetic and real images. The provided dataset is suitable for a wide range of applications, ranging from document processing to survaillance, and features a significant variability in terms of resolution, image density and number of components. All images are provided in 1 bit per pixel PNG format, with 0 (black) being background and 1 (white) being foreground. Keywords: Connected Components Labeling, Binary Images. |

|

KinteractWe collect a new dataset which has been explicitly designed and created for Human Computer Interaction. Keywords: HCI, depth images, gestures |

|

Garment segmentation and color classification datasetDataset described in: Manfredi, Marco; Grana, Costantino; Calderara, Simone; Cucchiara, Rita "A complete system for garment segmentation and color classification" MACHINE VISION AND APPLICATIONS, vol. 25, pp. 955 -969 , 2014 | DOI: 10.1007/s00138-013-0580-3 The dataset is composed of 60204 images of different pieces of clothes and accessories from most famous fashion designers. Keywords: fashion, images, clothes |

|

3dPeS3DPeS (3D People Surveillance Dataset) is a surveillance dataset, designed mainly for people re-identification in multi camera systems with non-overlapped field of views, but also applicable to many other tasks, such as people detection, tracking, action analysis and trajectory analysis. Available data: the camera setting and the 3D environment reconstruction, the hundreds of recorded videos, the camera calibration parameters, the identity of the hundreds of people, detected more than one time by different point of view. It contains numerous video sequences taken from a real surveillance setup, composed by 8 different surveillance cameras, monitoring a section of the campus of the University of Modena and Reggio Emilia. Data were collected over the course of several days. Keywords: re-identification, Visor, 3dpes, calibration |

|

Visor - VIdeo Surveillance Online RepositoryViSOR contains a large set of multimedia data and the corresponding annotations. The repository has been conceived as a support tool for different research projects. Keywords: Visor; surveillance; 3dPes, Sarc3D |