Recognizing and Presenting the Storytelling Video Structure with Deep Multimodal Networks

Abstract

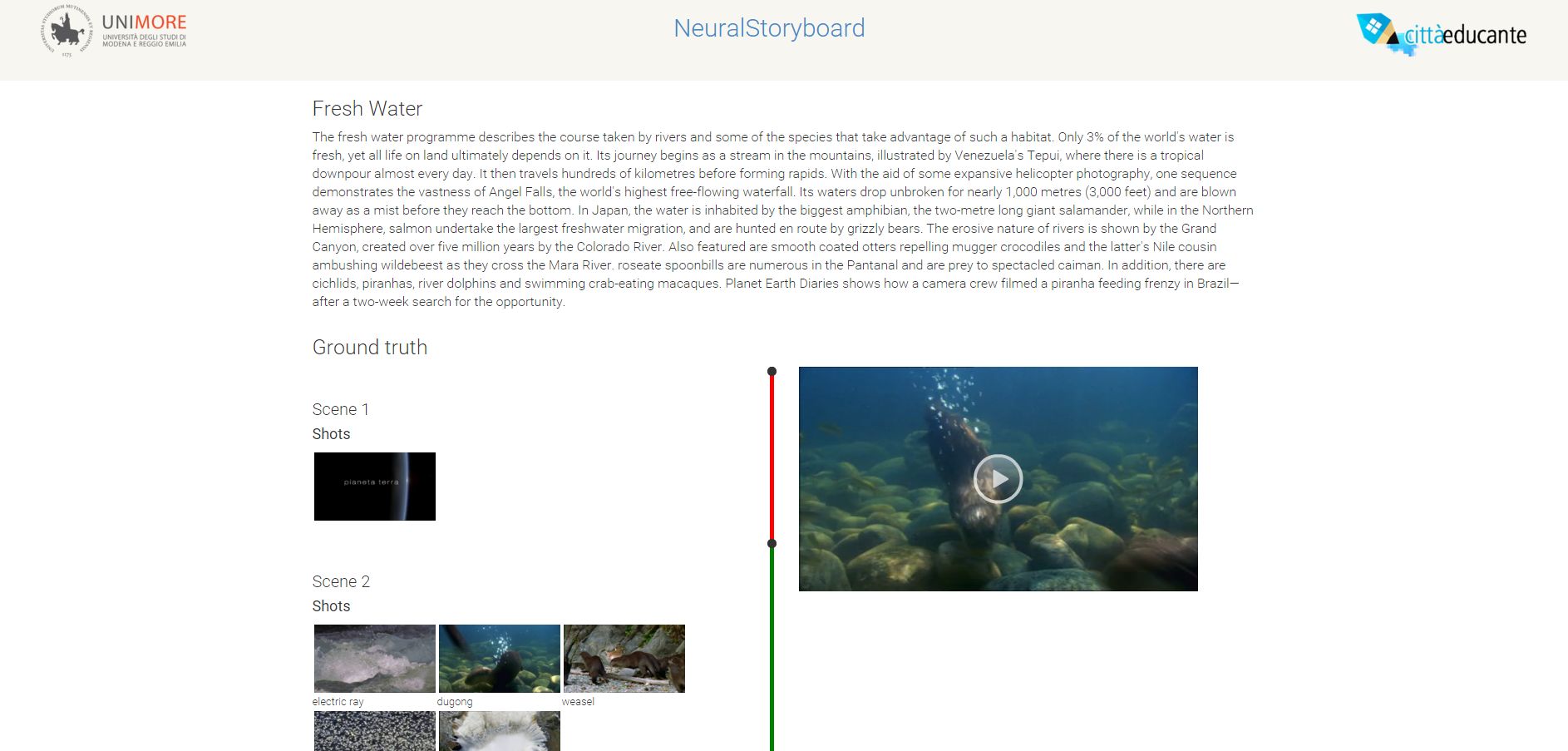

In this paper, we propose a novel scene detection algorithm which employs semantic, visual, textual and audio cues. We also show how the hierarchical decomposition of the storytelling video structure can improve the presentation of retrieval results with semantically and aesthetically effective thumbnails. Our method is built upon two advances over the state of the art: 1) semantic feature extraction, which builds video-specific concept detectors; 2) multimodal feature embedding learning, which maps the feature vector of a shot to a space in which the Euclidean distance has task-specific semantic properties. The proposed method decomposes the video into annotated temporal segments, which allow for query-specific thumbnail extraction. Extensive experiments are performed on different datasets to demonstrate the effectiveness of our algorithm. We also discuss in depth how to deal with the subjectivity of the task and suggest a strategy to overcome it.

Datasets

BBC Planet Earth Dataset

It contains the scene segmentation of eleven episodes of the BBC documentary series Planet Earth. Each episode is approximately 50 minutes long, and the whole dataset contains around 4900 shots and 670 scenes. Each video has been labelled by five different annotators.

Ally McBeal Dataset

The Ally McBeal dataset contains the scene segmentation of four episodes from the first season of Ally McBeal. Overall, it consists of 2660 shots and 160 scenes.
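In both datasets, the ground truth for an episode is a partition of its shot sequence into contiguous scenes. As a minimal sketch of how such a partition could be produced once every shot has been mapped into an embedding space where Euclidean distance reflects scene membership, the function below splits the sequence with dynamic programming, minimizing the within-scene sum of squared distances to each scene's mean plus a fixed cost per scene. This penalized formulation, the function name `segment_shots` and the `penalty` parameter are illustrative assumptions, not a description of the released code; the shot embeddings are assumed to be given.

```python
from typing import List, Sequence


def segment_shots(embeddings: Sequence[Sequence[float]], penalty: float) -> List[int]:
    """Split a sequence of shot embeddings into contiguous scenes.

    Minimizes the total within-scene sum of squared Euclidean distances to
    each scene's mean embedding, plus `penalty` per scene. The penalty is a
    hypothetical regularizer that controls how many scenes are produced.
    Returns the index of the first shot of every scene.
    """
    n = len(embeddings)
    if n == 0:
        return []
    dim = len(embeddings[0])

    # Prefix sums of the vectors and of their squared norms, so that the cost
    # of any segment can be computed in O(dim).
    pre = [[0.0] * dim]
    pre_sq = [0.0]
    for x in embeddings:
        pre.append([p + v for p, v in zip(pre[-1], x)])
        pre_sq.append(pre_sq[-1] + sum(v * v for v in x))

    def cost(i: int, j: int) -> float:
        # Sum of squared distances to the mean for shots i..j-1:
        # sum(||x||^2) - ||sum(x)||^2 / count
        s = [b - a for a, b in zip(pre[i], pre[j])]
        return pre_sq[j] - pre_sq[i] - sum(v * v for v in s) / (j - i)

    best = [0.0] + [float("inf")] * n  # best[j]: optimal cost of shots 0..j-1
    back = [0] * (n + 1)               # back[j]: first shot of the last scene
    for j in range(1, n + 1):
        for i in range(j):
            c = best[i] + cost(i, j) + penalty
            if c < best[j]:
                best[j], back[j] = c, i

    # Follow the back-pointers to recover the scene start indices.
    starts = []
    j = n
    while j > 0:
        starts.append(back[j])
        j = back[j]
    return starts[::-1]
```

For example, with `shots = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [5.0, 4.9]]`, `segment_shots(shots, penalty=1.0)` returns `[0, 2]`, while a very large penalty such as `1000.0` merges everything into one scene and returns `[0]`. The double loop is quadratic in the number of shots per episode.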

Code

- Complete Python sources for the temporal segmentation algorithm. Pretrained models are included.
  Python sources and models (1.42 GiB)

- Dealing with subjectivity: a dynamic programming approach for finding an agreement between different annotators.
  C++ source (3 KiB)
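This page does not describe how the released C++ code reaches an agreement. As a hedged illustration of the kind of dynamic program that can compare annotators, the sketch below aligns the scene boundaries of two annotators (shot indices where a new scene starts) with an edit-distance style recurrence: two boundaries may be matched when they lie within `tolerance` shots of each other, and every unmatched boundary pays a fixed gap cost. The names `align_boundaries` and `boundary_agreement`, the tolerance, the gap cost and the agreement score (twice the number of matches over the total number of boundaries) are all assumptions made for this example.

```python
from typing import List, Sequence, Tuple


def align_boundaries(
    a: Sequence[int], b: Sequence[int], tolerance: int
) -> List[Tuple[int, int]]:
    """Align two sorted lists of scene-boundary shot indices.

    Matching a[i] with b[j] costs |a[i] - b[j]| and is only allowed when that
    distance is at most `tolerance`; leaving a boundary unmatched costs
    tolerance + 1, so an allowed match is always cheaper than two gaps.
    Returns the matched (a_boundary, b_boundary) pairs in temporal order.
    """
    gap = tolerance + 1
    n, m = len(a), len(b)
    # cost[i][j]: minimum cost of aligning a[:i] with b[:j]
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = i * gap
    for j in range(1, m + 1):
        cost[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            options = [cost[i - 1][j] + gap, cost[i][j - 1] + gap]
            if d <= tolerance:
                options.append(cost[i - 1][j - 1] + d)
            cost[i][j] = min(options)

    # Backtrack from the full alignment, preferring matches on ties.
    pairs: List[Tuple[int, int]] = []
    i, j = n, m
    while i > 0 and j > 0:
        d = abs(a[i - 1] - b[j - 1])
        if d <= tolerance and cost[i][j] == cost[i - 1][j - 1] + d:
            pairs.append((a[i - 1], b[j - 1]))
            i, j = i - 1, j - 1
        elif cost[i][j] == cost[i - 1][j] + gap:
            i -= 1  # a[i - 1] is left unmatched
        else:
            j -= 1  # b[j - 1] is left unmatched
    pairs.reverse()
    return pairs


def boundary_agreement(a: Sequence[int], b: Sequence[int], tolerance: int) -> float:
    """Hypothetical score in [0, 1]: 2 * matches / (len(a) + len(b))."""
    if not a and not b:
        return 1.0
    return 2 * len(align_boundaries(a, b, tolerance)) / (len(a) + len(b))
```

For instance, with `a = [0, 10, 25, 40]`, `b = [0, 11, 24, 33, 40]` and `tolerance=2`, the alignment matches four pairs, leaves boundary 33 unmatched, and the agreement score is 8/9.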