Data Acquisition and Benchmarking for Human Behavior Understanding and Action Analysis

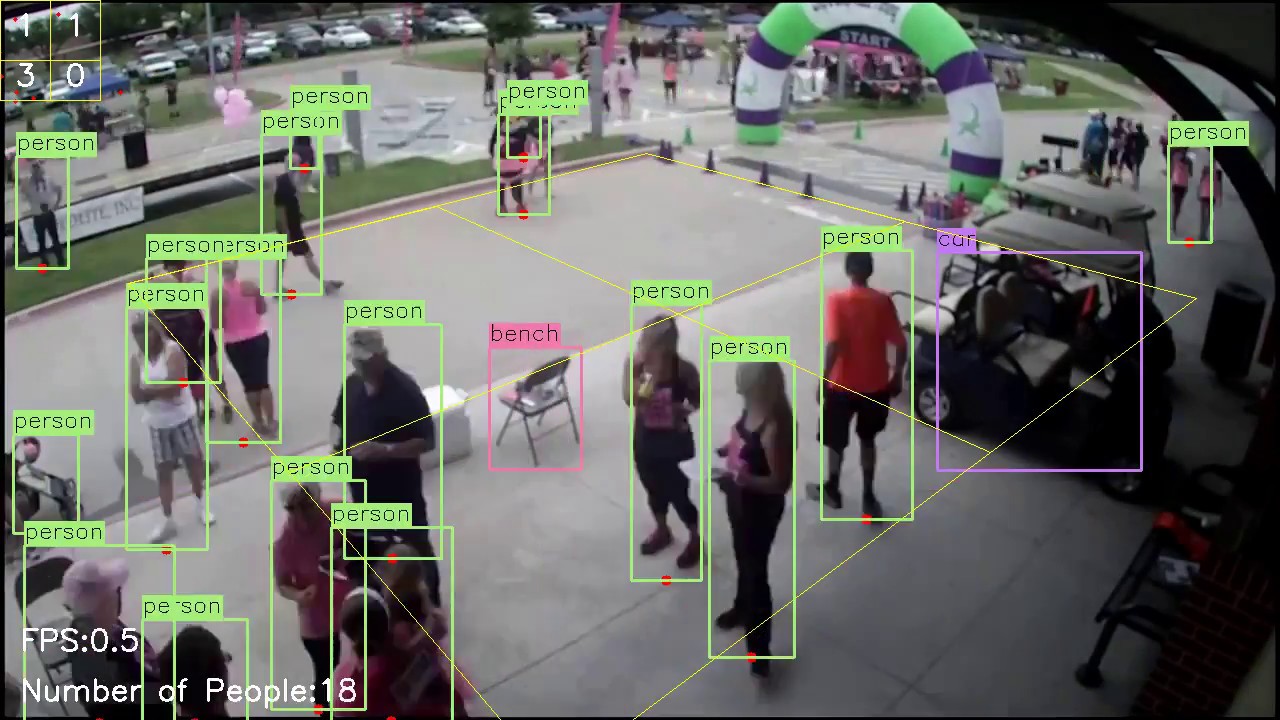

The DIVA program seeks to develop robust automatic activity detection for a multi-camera streaming video environment. Activities will be enriched by person and object detection. DIVA will address activity detection for both forensic applications and for real-time alerting.

The DIVA program intends to develop robust automated activity detection for a multi-camera streaming video environment. As an essential aspect of DIVA, activities will be enriched by person and object detection, as well as recognition at multiple levels of granularity. DIVA is anticipated to be a three-phase program. The program will focus on three major thrusts throughout all phases:

- Detection of primitive activities occurring in ground-based video collection. Examples include:

  - Person getting into a vehicle,

  - Person getting out of vehicle,

  - Person carrying object.

- Detection of complex activities, including pre-specified or newly defined activities. Examples include:

  - Person being picked up by vehicle,

  - Person abandoning object,

  - Two people exchanging an object,

  - Person carrying a firearm.

- Person and object detection and recognition across multiple overlapping and non-overlapping camera viewpoints.

The focus for phase 1 will be on video collected with the following properties:

- Video collected within the human-visible light spectrum;

- Video collected from indoor or outdoor security cameras, either fixed or with rigid motion such as pan-tilt-zoom.

In phases 2 and 3, additional data will include:

- Video collected from handheld or body-worn cameras;

- Video collected from other portions of the electromagnetic spectrum (e.g., infrared).

The DIVA program will produce a common framework and software prototype for activity detection and for person/object detection and recognition across a multi-camera network. The impact will be the development of tools for forensic analysis, as well as real-time alerting for user-defined threat scenarios.

Collaborative efforts and teaming among potential performers will be encouraged. It is anticipated that teams will be multidisciplinary, and might include expertise in machine learning, deep learning or hierarchical modeling, artificial intelligence, object detection, recognition, person detection and re-identification, person action recognition, video activity detection, tracking across multiple non-overlapping camera viewpoints, 3D reconstruction from video, super-resolution, statistics, probability and mathematics. Performers will not be asked to build a monolithic system for activity detection and tracking across a large camera network. Instead, research will focus on developing a common scalable framework that deploys in an open cloud architecture for activity detection, person/object detection and recognition across overlapping and non-overlapping cameras.

IARPA anticipates that academic institutions and companies from around the world will participate in this program. Researchers will be encouraged to publish their findings in academic journals.
