Egocentric Video Registration and Architectural Details Retrieval

Wearable devices and first-person camera views are key to enhancing tourists' cultural experiences in unconstrained outdoor environments. In this research we focus mainly on two topics: video registration and architectural details retrieval. The former is the task of precisely localizing the user (the 6 degrees of freedom of the camera they wear) with respect to a world reference system, while the latter deals with the unsupervised retrieval of the relevant details that a cultural site presents.

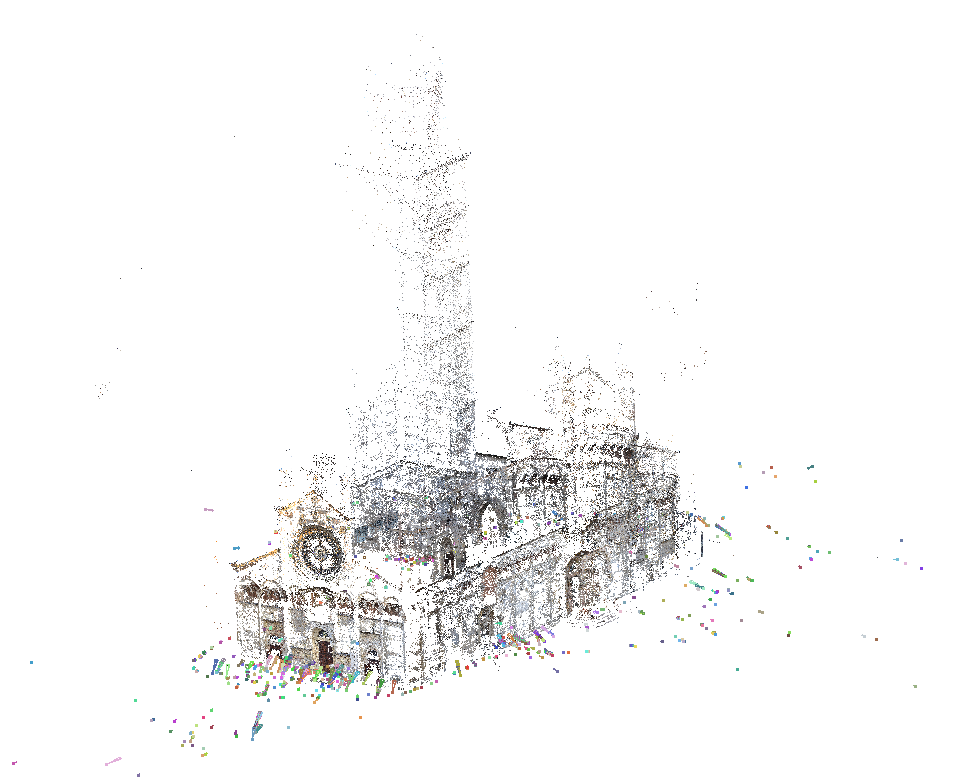

Given the recent advancements in 3D reconstruction and structure from motion (SfM), it is now possible to exploit rich 3D information to enhance tourists' cultural experiences. The first step in building an interactive application is to obtain a precise user localization. Because such an application requires high precision, GPS is not suitable: its error can reach 10 meters, which can misplace the tourist significantly.

By exploiting a video sequence acquired by a wearable camera worn by the tourist, video registration makes it possible to obtain a precise user localization, expressed as the rotation matrix and translation vector (6 degrees of freedom) of the camera with respect to a known environment. We therefore focus our research efforts on developing novel techniques to register the egocentric video sequence to a pre-built SfM model. In particular, because egocentric scenarios are unconstrained, videos can be acquired at night, when matching against a 3D model built on SIFT descriptors performs too poorly to be feasible.
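Registering a frame to the SfM model ultimately means recovering the rotation R and translation t from matches between image features and 3D points. As a minimal sketch, assuming the camera intrinsics K are calibrated and the 2D-3D correspondences are already established and outlier-free, the classic Direct Linear Transform (DLT) below recovers the pose from six or more non-coplanar points. This is a textbook stand-in, not necessarily the registration method used in this work, and the function names are hypothetical; a practical pipeline would typically wrap it in RANSAC and refine the result non-linearly.

```python
import numpy as np

def project_points(points_3d, R, t, K):
    """Project (N, 3) world points to (N, 2) pixel coordinates with pose [R | t]."""
    cam = points_3d @ R.T + t        # world frame -> camera frame
    pix = cam @ K.T                  # camera frame -> homogeneous pixels
    return pix[:, :2] / pix[:, 2:3]  # perspective division

def estimate_pose_dlt(points_3d, points_2d, K):
    """Estimate R (3x3) and t (3,) from N >= 6 non-coplanar 2D-3D matches.

    Hypothetical helper: assumes known intrinsics K and outlier-free
    correspondences (no RANSAC, no non-linear refinement).
    """
    n = len(points_3d)
    homog = np.hstack([points_2d, np.ones((n, 1))])
    norm = homog @ np.linalg.inv(K).T  # normalized camera coordinates, last entry = 1
    rows = []
    for Xw, x in zip(points_3d, norm):
        Xh = np.append(Xw, 1.0)
        zero = np.zeros(4)
        # Each correspondence gives two linear equations in the 12 entries of P.
        rows.append(np.concatenate([Xh, zero, -x[0] * Xh]))
        rows.append(np.concatenate([zero, Xh, -x[1] * Xh]))
    _, _, vt = np.linalg.svd(np.array(rows))
    P = vt[-1].reshape(3, 4)  # null-space solution, known only up to scale
    U, S, Vt = np.linalg.svd(P[:, :3])
    R = U @ Vt                # closest orthogonal matrix to the left 3x3 block
    t = P[:, 3] / S.mean()    # undo the magnitude of the unknown scale
    if np.linalg.det(R) < 0:  # a negative overall scale flips both R and t
        R, t = -R, -t
    return R, t
```

The helper `project_points` is the same pinhole projection that maps labeled 3D points onto a registered frame, so it also illustrates the detail-reprojection step once a pose is available.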

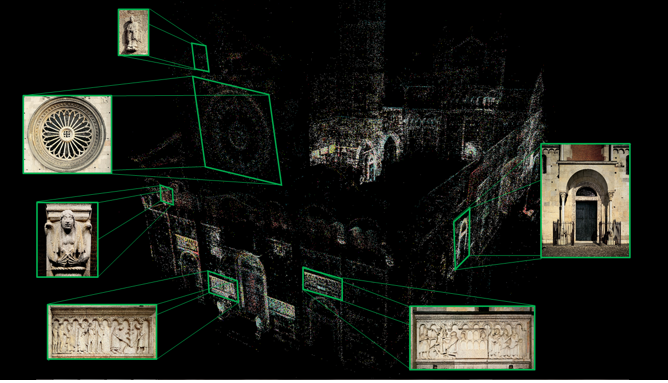

Once a precise localization is obtained, building an interactive application that augments the user's experience requires retrieving the relevant details from the surroundings.

To perform this retrieval, once a query image has been registered to the SfM model, the 2D-3D correspondences are exploited to reproject detail information from the 3D point cloud onto the query image. To satisfy the real-time requirements of this phase, we propose to cluster descriptors in a Bag-of-Words fashion and to further reduce the resulting feature vector using principal component analysis (PCA). This reduction matters because KD-trees, the most widely used data structures for speeding up correspondence search, degrade toward their worst-case performance on high-dimensional data points such as 128-dimensional SIFT descriptors.
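The descriptor-compression idea can be sketched as follows, assuming SIFT-like 128-dimensional float descriptors, a k-means vocabulary, one Bag-of-Words vector per image, and a small number of retained PCA components. All names and parameter values (`k`, `n_components`) are hypothetical, the exact choices made in this work are not stated here, and the brute-force `nearest` stands in for the KD-tree search that the reduced vectors are meant to make efficient.

```python
import numpy as np

def sq_dists(a, b):
    """Pairwise squared L2 distances between rows of a (N, D) and b (M, D)."""
    return (a ** 2).sum(1)[:, None] - 2.0 * a @ b.T + (b ** 2).sum(1)[None, :]

def build_vocabulary(descriptors, k, iters=20, seed=0):
    """Lloyd's k-means; the k centers act as the visual words."""
    rng = np.random.default_rng(seed)
    centers = descriptors[rng.choice(len(descriptors), size=k, replace=False)].copy()
    for _ in range(iters):
        labels = sq_dists(descriptors, centers).argmin(axis=1)
        for j in range(k):
            members = descriptors[labels == j]
            if len(members):  # leave a center in place if its cluster empties
                centers[j] = members.mean(axis=0)
    return centers

def bow_vector(descriptors, vocabulary):
    """L1-normalized histogram of visual-word assignments for one image."""
    words = sq_dists(descriptors, vocabulary).argmin(axis=1)
    hist = np.bincount(words, minlength=len(vocabulary)).astype(float)
    return hist / hist.sum()

def fit_pca(vectors, n_components):
    """Mean and top principal axes (as rows), computed with an SVD."""
    mean = vectors.mean(axis=0)
    _, _, vt = np.linalg.svd(vectors - mean, full_matrices=False)
    return mean, vt[:n_components]

def pca_project(vectors, mean, axes):
    """Project vectors onto the retained principal axes."""
    return (vectors - mean) @ axes.T

def nearest(query, database):
    """Index of the closest database row (brute force in place of a KD-tree)."""
    return int(sq_dists(query[None, :], database).argmin())
```

Reducing each vector to a handful of dimensions keeps tree-based search away from its high-dimensional worst case; the vocabulary size and the number of retained components trade speed against discriminative power.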

To reproject details from the 3D point cloud onto query images, a detail discovery algorithm must first label the 3D points and associate them with relevant architectural details. By computing the normal at each point of the cloud at different scales, it is possible to filter out planar regions and keep only the points presenting geometrical discontinuities. Clustering these points yields a set of candidates, which are then scored using social information. In particular, we exploit the fact that tourists commonly photograph relevant details, whereas discontinuities not due to the presence of a detail are rarely portrayed: this lets us refine the clustering results and label the points of the 3D cloud according to the detail identified.
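The multi-scale normal filtering can be sketched with a Difference-of-Normals-style test: estimate each point's normal by PCA over its k nearest neighbors at a small and a large scale, and keep the points where the two normals disagree. This is an illustrative stand-in under assumed parameters (neighborhoods of 8 and 32 points, a 10° threshold, and brute-force neighbor search suitable only for small clouds), not the exact criterion used in this work; the function names are hypothetical, and the subsequent clustering and social scoring are not shown.

```python
import numpy as np

def normals_at_scale(points, k):
    """Unit normal per point, from PCA over its k nearest neighbors (brute force)."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    neighbors = np.argsort(d2, axis=1)[:, :k]  # includes the point itself
    normals = np.empty_like(points, dtype=float)
    for i, idx in enumerate(neighbors):
        patch = points[idx] - points[idx].mean(axis=0)
        # The normal is the direction of least variance in the local patch.
        _, _, vt = np.linalg.svd(patch, full_matrices=False)
        normals[i] = vt[-1]
    return normals

def discontinuity_mask(points, k_small=8, k_large=32, min_angle_deg=10.0):
    """True where the normals at the two scales differ by more than min_angle_deg."""
    n_small = normals_at_scale(points, k_small)
    n_large = normals_at_scale(points, k_large)
    # abs() ignores the arbitrary sign of each estimated normal.
    cos = np.clip(np.abs((n_small * n_large).sum(axis=1)), 0.0, 1.0)
    return np.degrees(np.arccos(cos)) > min_angle_deg
```

On a flat facade both scales agree and nothing is flagged; around protruding elements the small-scale normals follow the local geometry while the large-scale ones follow the wall, which is what lets such points survive the filter.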

Publications

1. Alletto, Stefano; Abati, Davide; Serra, Giuseppe; Cucchiara, Rita. "Exploring Architectural Details Through a Wearable Egocentric Vision Device". SENSORS, vol. 16(2), pp. 1-15, 2016. DOI: 10.3390/s16020237 (Journal)