Hand Monitoring and Gesture Recognition for Human-Car Interaction

Gesture-based human-computer interaction is a well-established field of computer vision research. Our studies focus on gesture recognition in the automotive field.

Our main goal is to develop a dynamic hand gesture-based system for human-vehicle interaction that can reduce driver distraction.

To recognize a gesture, the hand must first be detected in a given image. Then, the performed gesture is analyzed and a gesture class is predicted, enabling smooth communication between the driver and the car.

Hand Detection and Tracking

Hand detection and tracking have been widely studied in the computer vision community. In particular, hand motion analysis is an interesting topic in the automotive context, since hands are a crucial body part for studying motion, behavior, and the interaction between humans and their environment, including the car.

Moreover, the growing number of vision-based systems installed in cars, looking both outside and inside the vehicle, provides a huge amount of data and new ways to acquire images during driving.

An important aspect is that distracted driving plays a crucial role in road crashes. There are three categories of driving distraction:

- Manual distraction: driver's hands are off the wheel;

- Visual distraction: driver's eyes are not looking at the road;

- Cognitive distraction: driver attention is not focused on driving activity.

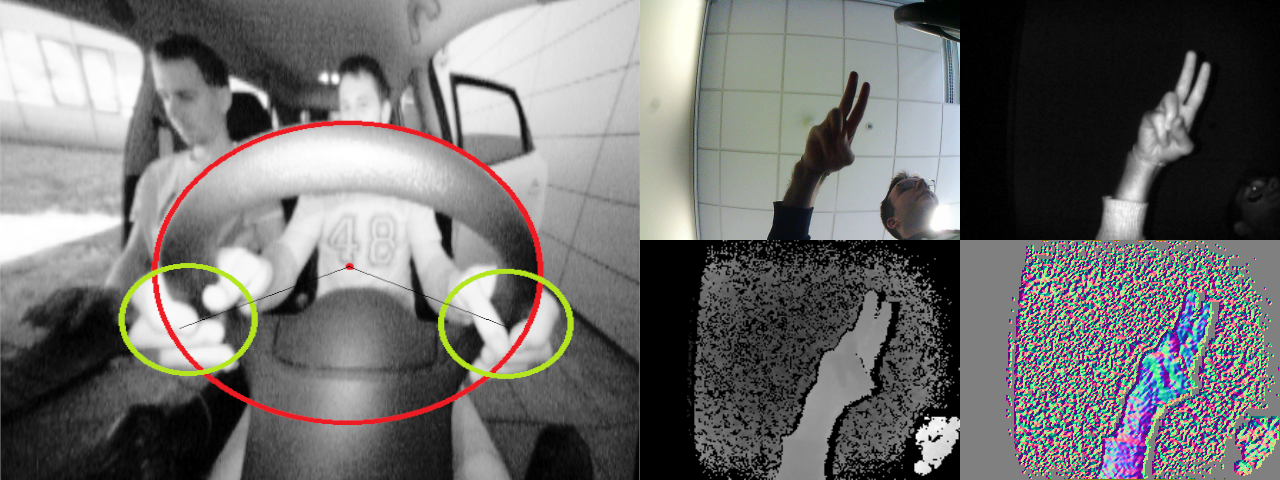

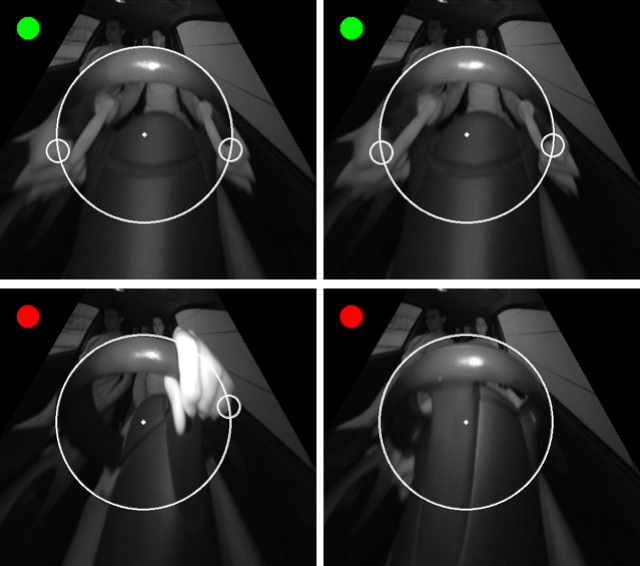

In this research project, we focus on the first category, manual distraction. We believe that hand position is a key element for assessing driver attention and behavior. The National Highway Traffic Safety Administration (NHTSA) defines driving distraction as "an activity that could divert a person's attention away from the primary task of driving"; accordingly, we assume that the hands should grip the wheel steadily throughout the whole driving activity, apart from short, well time-delimited events such as shifting gears, adjusting the rear-view mirror, and so on.
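The assumption that hands leave the wheel only for short, time-delimited events can be illustrated with a simple temporal rule: per-frame "hands on wheel" decisions are turned into alerts, tolerating brief off-wheel events (a gear shift, a mirror adjustment) and flagging longer ones. This is a minimal sketch, not our method: the `(timestamp, on_wheel)` input format, the function name `off_wheel_intervals`, and the 2-second threshold are all hypothetical choices made for this example.

```python
def off_wheel_intervals(frames, max_off_seconds=2.0):
    """Return (start, end) intervals in which the hands stay off the wheel
    for longer than `max_off_seconds` (a hypothetical threshold).

    `frames` is a time-ordered list of (timestamp_in_seconds, on_wheel) pairs,
    where `on_wheel` is a per-frame decision from some hand detector (assumed).
    """
    alerts = []
    off_start = None  # timestamp of the first frame of the current off-wheel run
    for timestamp, on_wheel in frames:
        if not on_wheel:
            if off_start is None:
                off_start = timestamp
        else:
            # Hands are back on the wheel: close the run and check its duration.
            if off_start is not None and timestamp - off_start > max_off_seconds:
                alerts.append((off_start, timestamp))
            off_start = None
    # The sequence may end while the hands are still off the wheel.
    if off_start is not None:
        last_timestamp = frames[-1][0]
        if last_timestamp - off_start > max_off_seconds:
            alerts.append((off_start, last_timestamp))
    return alerts


# Hypothetical example: one frame per second.
on_wheel = [True, True, True, False, False, True, False, False, False, False, True]
print(off_wheel_intervals(list(zip(range(11), on_wheel))))
```

With one frame per second, the hands leaving the wheel at t=3 and returning at t=5 (a brief gear shift) produce no alert, while the run starting at t=6 and ending at t=10 is reported as the interval (6, 10).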

Smartphones, for example, are one of the most important causes of fatal crashes: they cause about 18% of fatal driver accidents in North America and involve all three distraction categories mentioned above. Moreover, drivers today are increasingly engaged in secondary tasks behind the wheel.

For these reasons, we aim to propose a method able to determine whether the driver's hands are on or next to the wheel. To do this, we place an infrared camera (Leap Motion) in a specific position.

Hands on the wheel: a Dataset for Driver Hand Detection and Tracking, TURMS Dataset

G. Borghi, E. Frigieri, R. Vezzani, R. Cucchiara

Human Behaviour Understanding @ International Conference on Face and Gesture, HBU 2018 - FG 2018

Dynamic Hand Gesture Recognition

Recognizing a dynamic gesture in a video while it is being performed is a well-known computer vision task. Hand gesture recognition is commonly tackled with architectures able to model the temporal and sequential nature of dynamic gestures, such as RNNs, LSTMs, or 3D CNNs.
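Recognizing a gesture while it is still being performed usually means feeding a temporal model with the most recent frames. A minimal sketch, assuming a clip-based classifier (for example a 3D CNN) that expects a fixed number of frames: a rolling buffer emits the latest `clip_len` frames every `stride` new frames. `ClipBuffer`, `clip_len` and `stride` are hypothetical names and values chosen for illustration.

```python
from collections import deque


class ClipBuffer:
    """Rolling window over an incoming frame stream (hypothetical helper).

    Once `clip_len` frames have been collected, a clip containing the most
    recent `clip_len` frames is returned every `stride` new frames, so a
    clip-level classifier can run while the gesture is still in progress.
    """

    def __init__(self, clip_len=16, stride=4):
        self.frames = deque(maxlen=clip_len)  # oldest frames are dropped automatically
        self.stride = stride
        self._since_last_clip = 0

    def push(self, frame):
        """Add one frame; return a list of frames if a new clip is ready, else None."""
        self.frames.append(frame)
        self._since_last_clip += 1
        if len(self.frames) == self.frames.maxlen and self._since_last_clip >= self.stride:
            self._since_last_clip = 0
            return list(self.frames)
        return None


# Usage: integers stand in for camera frames; each clip would go to the classifier.
clip_buffer = ClipBuffer(clip_len=4, stride=2)
clips = [clip for clip in (clip_buffer.push(i) for i in range(10)) if clip is not None]
print(clips)
```

Overlapping clips (stride smaller than the clip length) trade extra computation for lower latency between the end of a gesture and its prediction.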

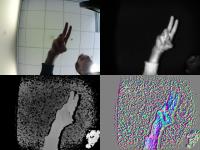

Furthermore, the introduction of affordable RGB-D devices, which couple RGB cameras with active depth sensors, has attracted the interest of the research community. Indeed, recognizing hand gestures without contact-based sensors, even in low-light or dark environments, is a crucial enabling capability.

Few public datasets provide such a combination of data, and even fewer focus on the automotive context, i.e. are recorded inside a car or a car cockpit.

Therefore, we acquired Briareo, a multimodal dataset of dynamic hand gestures including RGB, depth, and infrared data. Twelve different gestures, designed for human-car interaction, are performed by 40 subjects in a car cockpit.

After acquiring the dataset, we also studied how combining different data types can boost the accuracy of gesture recognition models.
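One simple way to combine modalities is late fusion: each data type (e.g. RGB, depth, infrared) gets its own classifier, and only their output scores are merged. The sketch below averages per-modality softmax probabilities, optionally weighted. It is a generic illustration of late fusion; the function names, the uniform default weights and the example scores are assumptions, not the exact scheme or results of the Briareo paper.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(per_modality_logits, weights=None):
    """Fuse the outputs of independent single-modality classifiers.

    Each entry of `per_modality_logits` holds the class scores of one modality.
    Probabilities are combined with a weighted average (uniform by default;
    custom weights are expected to sum to 1). Returns (predicted_class, fused_probs).
    """
    n = len(per_modality_logits)
    if weights is None:
        weights = [1.0 / n] * n
    probs = [softmax(logits) for logits in per_modality_logits]
    num_classes = len(probs[0])
    fused = [sum(w * p[c] for w, p in zip(weights, probs)) for c in range(num_classes)]
    return fused.index(max(fused)), fused


# Hypothetical scores for a 3-class problem from three modality-specific models.
rgb, depth, ir = [2.0, 1.0, 0.0], [0.0, 3.0, 0.0], [0.0, 2.5, 0.5]
predicted, fused = late_fusion([rgb, depth, ir])
print(predicted, [round(p, 3) for p in fused])
```

Here the RGB model alone would pick class 0, but the depth and infrared models agree on class 1, so the fused prediction is class 1. Because the modalities are only combined at the output, each single-modality network can be trained separately and a missing sensor can simply be dropped from the average.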

Paper

Hand Gestures for the Human-Car Interaction: the Briareo dataset

F. Manganaro, S. Pini, G. Borghi, R. Vezzani, R. Cucchiara

International Conference on Image Analysis and Processing, ICIAP 2019

Attention-based models, which rely on self-attention mechanisms, have spread rapidly since the introduction of the Transformer module, which can replace traditional recurrent modules.

In our latest work, we propose a method to classify dynamic hand gestures based on the Transformer architecture, even though it was originally developed for machine translation and language modeling.
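At the core of the Transformer is scaled dot-product self-attention, `softmax(Q K^T / sqrt(d_k)) V`, which lets every frame of a gesture attend to every other frame instead of processing them one at a time as a recurrent module does. A minimal single-head sketch in pure Python, assuming each frame has already been encoded as a feature vector; it omits multi-head attention, positional encodings and feed-forward layers, and it is not the architecture of our paper. The projection matrices `w_q`, `w_k`, `w_v` would be learned in practice; identity matrices are used here only for illustration.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(m):
    """Apply a numerically stable softmax to each row of a matrix."""
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(v - mx) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: T x d_model sequence of per-frame feature vectors.
    w_q, w_k: d_model x d_k projections; w_v: d_model x d_v projection.
    Returns (output T x d_v, attention weights T x T).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K: d_k x T
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    attn = softmax_rows(scores)  # row t: how much frame t attends to every frame
    return matmul(attn, v), attn


# Hypothetical 3-frame sequence with 2-dimensional features and identity projections.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(frames, identity, identity, identity)
```

Every row of `attn` is a probability distribution over all frames of the sequence, so all frames are processed in parallel rather than step by step.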

In addition, we propose to use depth maps combined with the surface normals estimated from them as the only data sources to successfully solve the task, even in low-light conditions.
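Surface normals can be estimated from a depth map by looking at how depth changes between neighboring pixels: with gradients dz/dx and dz/dy, the unnormalized normal of the surface z = depth(x, y) is (-dz/dx, -dz/dy, 1). A minimal sketch using central differences, assuming depth values expressed in the same units as the pixel spacing (camera intrinsics are ignored); the exact estimation procedure used in our work may differ.

```python
import math


def surface_normals(depth):
    """Estimate a unit surface normal for every pixel of a depth map.

    `depth` is a list of rows (H x W). Gradients are computed with central
    differences (one-sided at the borders); the normal is (-dz/dx, -dz/dy, 1)
    normalized to unit length. Depth is assumed to use the same units as the
    pixel spacing.
    """
    h, w = len(depth), len(depth[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
            dzdy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)
            nx, ny, nz = -dzdx, -dzdy, 1.0
            norm = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / norm, ny / norm, nz / norm))
        normals.append(row)
    return normals


# Hypothetical 3 x 4 depth map whose depth grows by one unit per column.
ramp = [[float(x) for x in range(4)] for _ in range(3)]
normals = surface_normals(ramp)
```

For this ramp every normal is approximately (-0.707, 0, 0.707), tilted against the direction in which depth increases. In practice the three components are often rescaled to an image range so that standard 2D CNNs can consume them like an RGB frame.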

We show that the proposed architecture obtains state-of-the-art results, is suitable for late-fusion multimodal combination and can run in real-time.

Paper

A Transformer-Based Network for Dynamic Hand Gesture Recognition

A. D’Eusanio, A. Simoni, S. Pini, G. Borghi, R. Vezzani, R. Cucchiara

International Conference on 3D Vision, 3DV 2020

Publications

1. D’Eusanio, Andrea; Simoni, Alessandro; Pini, Stefano; Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "A Transformer-Based Network for Dynamic Hand Gesture Recognition". 2020 International Conference on 3D Vision (3DV 2020), Online, pp. 623-632, 25-28 November 2020. DOI: 10.1109/3DV50981.2020.00072 [Conference]

2. D’Eusanio, Andrea; Simoni, Alessandro; Pini, Stefano; Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "Multimodal Hand Gesture Classification for the Human-Car Interaction". Informatics, vol. 7, pp. 1-16, 2020. DOI: 10.3390/informatics7030031 [Journal]

3. Manganaro, Fabio; Pini, Stefano; Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "Hand Gestures for the Human-Car Interaction: the Briareo dataset". Proceedings of the 20th International Conference on Image Analysis and Processing, vol. 11752, Trento, Italy, pp. 560-571, 9-13 September 2019. DOI: 10.1007/978-3-030-30645-8_51 [Conference]

4. Caputo, F. M.; Burato, S.; Pavan, G.; Voillemin, T.; Wannous, H.; Vandeborre, J. P.; Maghoumi, M.; Taranta, E. M.; Razmjoo, A.; Laviola Jr., J. J.; Manganaro, Fabio; Pini, S.; Borghi, G.; Vezzani, R.; Cucchiara, R.; Nguyen, H.; Tran, M. T.; Giachetti, A. "SHREC 2019 Track: Online Gesture Recognition". Eurographics Workshop on 3D Object Retrieval, vol. 160897, Genova, pp. 93-102, 5-6 May 2019. DOI: 10.2312/3dor.20191067 [Conference]

5. Borghi, Guido; Frigieri, Elia; Vezzani, Roberto; Cucchiara, Rita. "Hands on the wheel: a Dataset for Driver Hand Detection and Tracking". Proceedings of the 8th International Workshop on Human Behavior Understanding (HBU), Xi'An, pp. 564-570, 15 May 2018. DOI: 10.1109/FG.2018.00090 [Conference]

6. Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "Fast gesture recognition with Multiple Stream Discrete HMMs on 3D Skeletons". Proceedings of the 23rd International Conference on Pattern Recognition, Cancun, pp. 997-1002, 4-8 December 2016. DOI: 10.1109/ICPR.2016.7899766 [Conference]