Dr(eye)ve: a Dataset for Attention-Based Tasks with Applications to Autonomous Driving

Autonomous and assisted driving are undoubtedly hot topics in computer vision. However, the driving task is extremely complex and a deep understanding of drivers’ behavior is still lacking. Several researchers are now investigating the attention mechanism in order to define computational models for detecting salient and interesting objects in the scene.

Nevertheless, most of these models only refer to bottom-up visual saliency and are focused on still images. During the driving experience, instead, the temporal nature and peculiarity of the task influence the attention mechanisms, leading to the conclusion that real-life driving data is mandatory.

We created a novel and publicly available dataset acquired during actual driving.

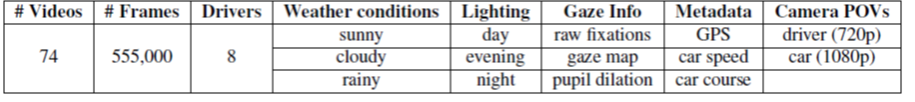

Our dataset, composed of more than 500,000 frames, contains drivers’ gaze fixations and their temporal integration, which provides task-specific saliency maps. Geo-referenced locations, driving speed and course complete the set of released data.
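To illustrate what temporal integration of fixations can look like in practice, here is a minimal sketch. It is not the dataset's actual pipeline: the function name `fixation_map`, the symmetric frame window, the Gaussian spread `sigma`, and the toy grid size are all assumptions made for illustration. The idea is that fixations from neighbouring frames are accumulated as Gaussian blobs and normalized into a per-frame map.

```python
import math


def fixation_map(fixations_per_frame, t, half_window, width, height, sigma):
    """Build a normalized map for frame t from fixations in nearby frames.

    fixations_per_frame: list (one entry per frame) of lists of (x, y) points.
    All parameters are illustrative assumptions, not values from the dataset.
    """
    # Frames considered: [t - half_window, t + half_window], clipped to the video.
    start = max(0, t - half_window)
    stop = min(len(fixations_per_frame), t + half_window + 1)

    grid = [[0.0] * width for _ in range(height)]
    for frame_fixations in fixations_per_frame[start:stop]:
        for fx, fy in frame_fixations:
            # Add one isotropic Gaussian blob centered on the fixation.
            for y in range(height):
                for x in range(width):
                    d2 = (x - fx) ** 2 + (y - fy) ** 2
                    grid[y][x] += math.exp(-d2 / (2.0 * sigma ** 2))

    # Scale to [0, 1]; an empty window yields an all-zero map.
    peak = max(max(row) for row in grid)
    if peak > 0:
        grid = [[value / peak for value in row] for row in grid]
    return grid


# Toy example: four frames of fixations on a 10x8 grid.
fixations = [[(2, 2)], [(3, 2)], [], [(7, 5)]]
saliency = fixation_map(fixations, t=1, half_window=1, width=10, height=8, sigma=1.5)
```

In this toy example the fixation at `(7, 5)` belongs to frame 3, which lies outside the window around frame 1, so it does not contribute to the resulting map. A real pipeline would work on full-resolution frames with vectorized array operations rather than nested Python loops.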

To the best of our knowledge, this is the first publicly available dataset of its kind, and it can foster new discussions on better understanding, exploiting and reproducing the driver’s attention process in the autonomous and assisted cars of future generations.

At a computational level, human attention and eye fixation are typically modeled through the concept of visual saliency. Most of the literature on visual saliency focuses on filtering, selecting and synthesizing task-dependent features for automatic object recognition. Nevertheless, the majority of experiments are conducted in controlled environments (e.g. laboratory settings) and on sequences of unrelated images.

Conversely, our dataset has been collected “on the road” and it exhibits the following features:

- It is public and open. It provides hours of driving videos that can be used for understanding the attention phenomena;

- It is task- and context-dependent. In line with psychological studies on attention, data are collected during a real driving experience, making them as realistic as possible;

- It is precise and scientifically solid. We use high-end attention recognition instruments, in conjunction with camera data and GPS information.

Learn More and Download Dr(eye)ve Dataset here

Publications

1. Palazzi, Andrea; Abati, Davide; Calderara, Simone; Solera, Francesco; Cucchiara, Rita. "Predicting the Driver's Focus of Attention: the DR(eye)VE Project". IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, pp. 1720-1733, 2019. DOI: 10.1109/TPAMI.2018.2845370 (Journal)

2. Alletto, Stefano; Palazzi, Andrea; Solera, Francesco; Calderara, Simone; Cucchiara, Rita. "DR(eye)VE: a Dataset for Attention-Based Tasks with Applications to Autonomous and Assisted Driving". IEEE International Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, 2016. DOI: 10.1109/CVPRW.2016.14 (Conference)