Learning to Map Vehicles into Bird's Eye View

Awareness of the road scene is an essential component of both autonomous vehicles and Advanced Driver Assistance Systems (ADAS), and its relevance is growing in both academic research and the automotive industry.

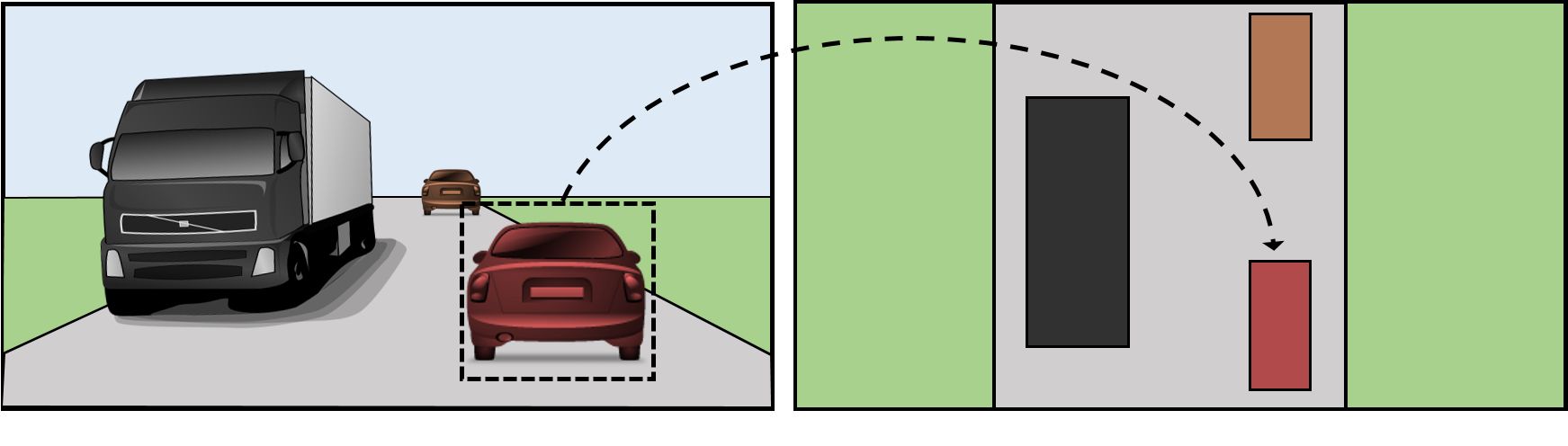

This paper presents a way to learn a semantic-aware transformation that maps detections from a dashboard camera view onto a broader bird's eye occupancy map of the scene. To this end, a large synthetic dataset of paired frames, captured from both the dashboard and the bird's eye view in driving scenarios, is collected: more than 1 million examples are automatically annotated. A deep network is then trained to warp detections from the first view to the second. We demonstrate the effectiveness of our model against several baselines and observe that it is able to generalize to real-world data despite having been trained solely on synthetic data.

Vision-based algorithms and models have been widely adopted in current-generation ADAS solutions. Moreover, recent research achievements in semantic scene segmentation, road obstacle detection, and prediction of the driver's gaze, pose and attention are likely to play a major role in the rise of autonomous driving.

Three major paradigms can be identified for vision-based autonomous driving systems: mediated perception approaches, based on the total understanding of the scene around the car; behavior reflex methods, in which the driving action is regressed directly from the sensory input; and direct perception techniques, which fuse elements of the previous two approaches and learn a mapping between the input image and a set of interpretable indicators that summarize the driving situation.

Following this last line of work, in this paper we develop a model for mapping vehicles across different views. In particular, our aim is to warp vehicles detected from a dashboard camera view into a bird's eye occupancy map of the surroundings, which is an easily interpretable proxy of the road state. Since it is almost impossible to collect a dataset with this kind of information in the real world, we rely exclusively on synthetic data to learn this projection.
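To make the target representation concrete, the snippet below is a minimal sketch of how a bird's eye occupancy map could be rasterized once vehicle boxes are available in bird's eye coordinates. The function name `rasterize_occupancy`, the box convention (axis-aligned, maximum edges exclusive, expressed in grid cells) and the grid size are assumptions made for illustration only; the source does not specify the map format.

```python
def rasterize_occupancy(boxes, height, width):
    """Mark the grid cells covered by vehicle boxes in a bird's eye map.

    boxes: iterable of (x_min, y_min, x_max, y_max) in grid cells, with the
           max edges exclusive (a hypothetical convention, not from the paper).
    Returns a height x width list of lists holding 0 (free) or 1 (occupied).
    """
    grid = [[0] * width for _ in range(height)]
    for x_min, y_min, x_max, y_max in boxes:
        # Clip to the grid so vehicles partially outside the map are still drawn.
        x0, x1 = max(0, int(x_min)), min(width, int(x_max))
        y0, y1 = max(0, int(y_min)), min(height, int(y_max))
        for row in range(y0, y1):
            for col in range(x0, x1):
                grid[row][col] = 1
    return grid


# Two illustrative boxes; the second one extends past the right border.
occupancy = rasterize_occupancy([(2, 3, 5, 7), (8, 0, 12, 2)], height=10, width=10)
occupied_cells = sum(map(sum, occupancy))
```

A binary grid of this kind is easy to inspect and compare, which is what makes the occupancy map a convenient proxy of the road state.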

We aim to create a system close to surround vision monitoring systems, also called around view cameras, which can be useful tools for assisting the driver during driving operations, for example by performing trajectory analysis of vehicles outside the driver's own visual field.

In this framework, our contribution is twofold:

- We make available a large synthetic dataset (more than 1 million examples) consisting of pairs of frames that show the same driving scene captured from two different views. Besides the vehicle location, auxiliary information such as the distance and yaw of each vehicle at each frame is also provided.

Click here for dataset download page.

- We propose a new method for generating bird's eye occupancy maps of the surroundings in the context of autonomous and assisted driving. Our approach requires neither a stereo camera nor more sophisticated sensors such as radar and lidar. Instead, we learn how to project detections from the dashboard camera view onto a broader bird's eye view of the scene. To this end, we combine a learned geometric transformation with visual cues that preserve object size and orientation in the warping procedure.
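As a rough picture of what one annotated example in the dataset might hold, the sketch below groups the quantities named in the first contribution (vehicle location in both views, distance, yaw) into a single record. The class name, field names, box layout and units (metres, degrees) are hypothetical and chosen only for illustration; the actual file format of the released dataset may differ, and the sample values are invented.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed layout


@dataclass(frozen=True)
class VehicleAnnotation:
    """One vehicle seen in a dashboard frame and in the paired bird's eye frame."""

    frontal_box: Box   # vehicle location in the dashboard camera view
    birdeye_box: Box   # location of the same vehicle in the bird's eye view
    distance_m: float  # distance of the vehicle (metres assumed)
    yaw_deg: float     # yaw of the vehicle (degrees assumed)

    def yaw_rad(self) -> float:
        """Return the yaw converted to radians."""
        return math.radians(self.yaw_deg)


# Invented values, used only to show how a record would be built and read.
sample = VehicleAnnotation(
    frontal_box=(410.0, 220.0, 520.0, 300.0),
    birdeye_box=(95.0, 40.0, 110.0, 70.0),
    distance_m=12.5,
    yaw_deg=90.0,
)
```

Under these assumptions, a model in the spirit of the second contribution would take `frontal_box` (together with visual cues from the dashboard frame) as input and be supervised with `birdeye_box` as its target.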