Video synthesis from Intensity and Event Frames

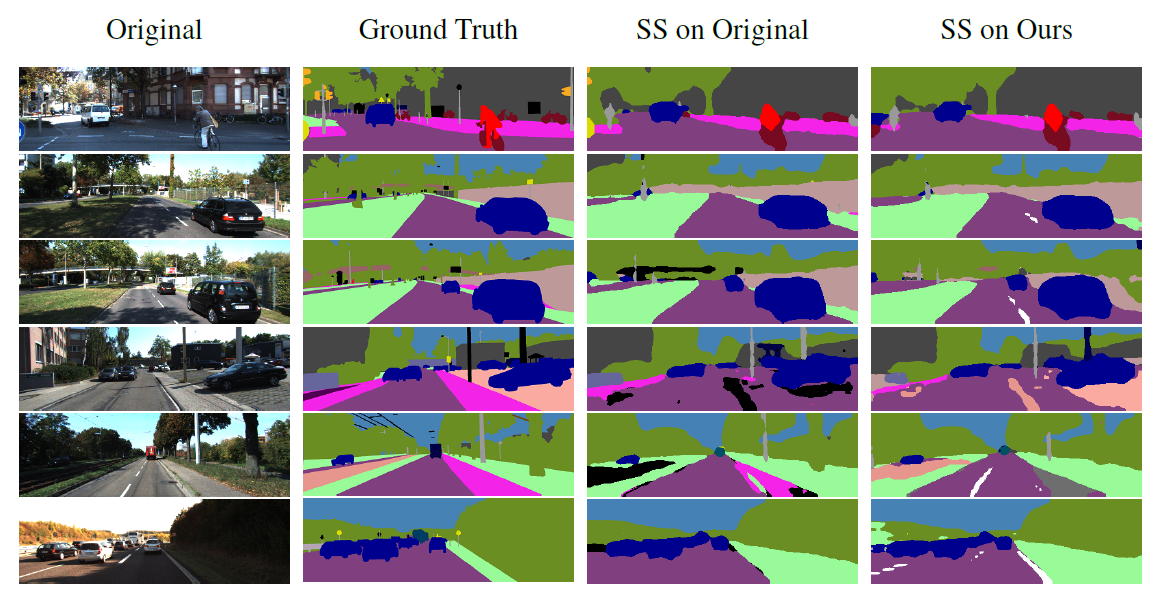

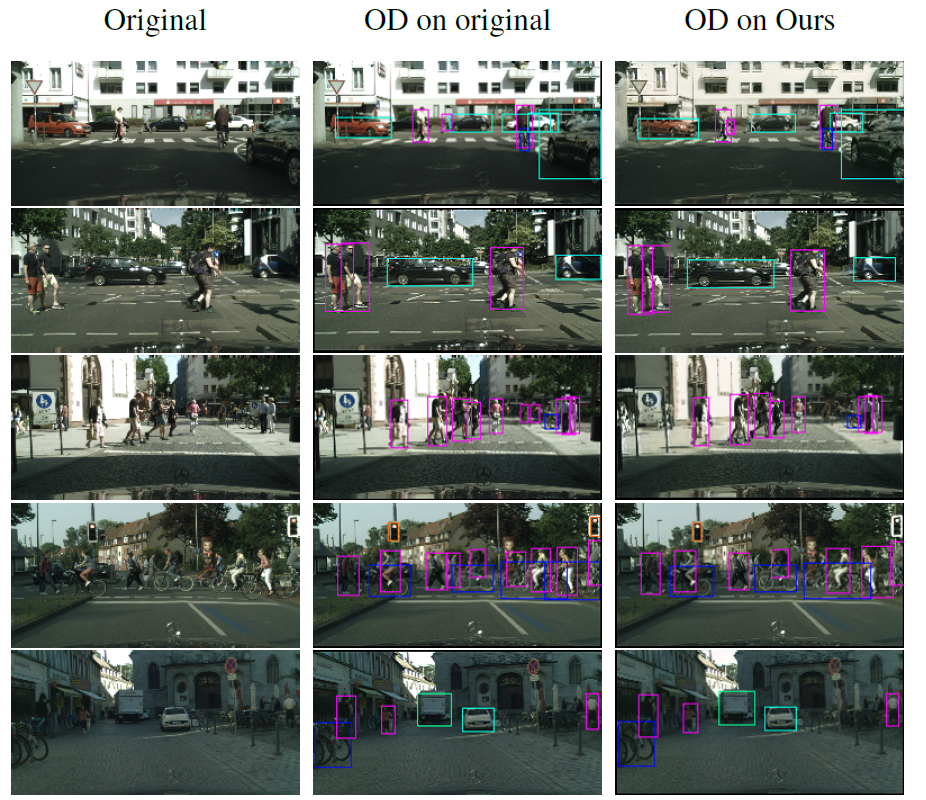

Event cameras, neuromorphic devices that naturally respond to brightness changes, have multiple advantages over traditional cameras. However, the difficulty of applying traditional computer vision algorithms, such as Semantic Segmentation and Object Detection, to event data limits their usability.

Therefore, we investigate a deep learning-based architecture that combines an initial grayscale frame with a sequence of event data to estimate the subsequent intensity frames. We evaluate the proposed approach on a public automotive dataset, providing a fair comparison with a state-of-the-art approach.

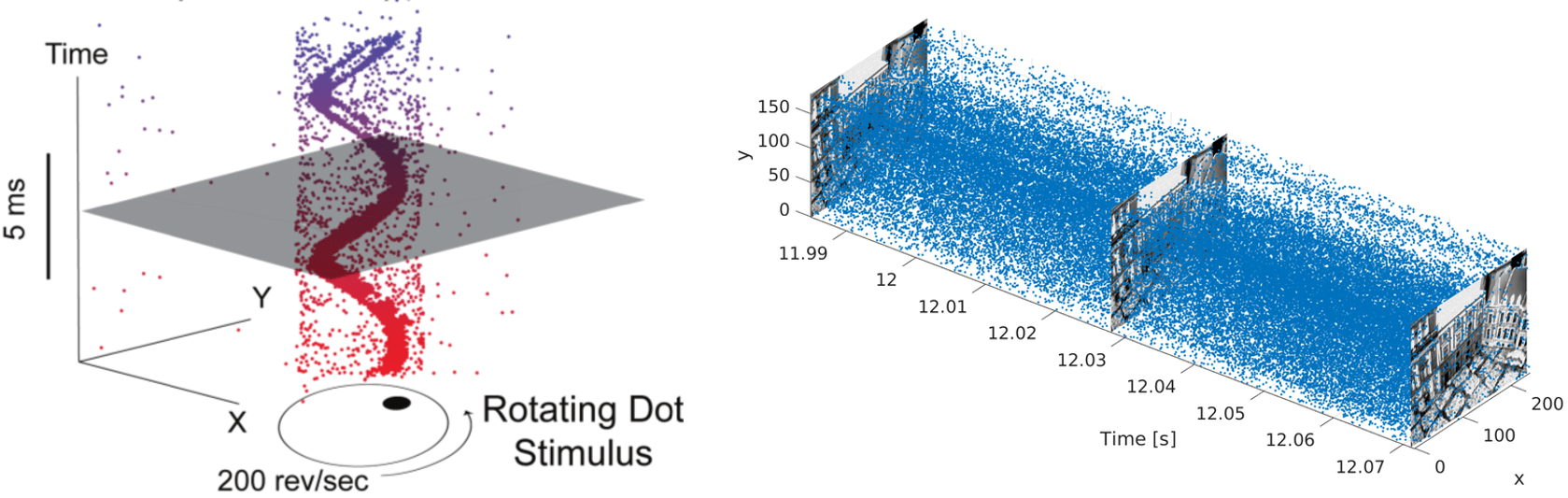

Event Cameras

Event cameras are optical sensors that asynchronously output events whenever the brightness changes at the pixel level. The main advantages of this type of neuromorphic sensor are:

- Low power consumption

- Low data rate

- High temporal resolution

- High dynamic range
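To make the pixel-level behaviour described above concrete, here is a minimal sketch of the idealized model commonly used to describe event cameras: a pixel emits an event with polarity +1 or -1 each time its log intensity has changed by a contrast threshold since its last event. Noise, refractory periods and per-pixel threshold mismatch of real sensors are ignored, and the `simulate_pixel_events` helper and its default threshold are illustrative assumptions, not the behaviour of a specific DAVIS sensor.

```python
import math
from typing import List, Sequence, Tuple

Event = Tuple[float, int]  # (timestamp, polarity: +1 brighter, -1 darker)


def simulate_pixel_events(samples: Sequence[Tuple[float, float]],
                          threshold: float = 0.25) -> List[Event]:
    """Events emitted by one idealized pixel (hypothetical helper).

    samples: time-ordered (timestamp, intensity) pairs with intensity > 0.
    The first sample sets the reference log intensity; afterwards one event
    is emitted per `threshold` crossing, and the reference moves by `threshold`.
    """
    _, first_intensity = samples[0]
    reference = math.log(first_intensity)
    events: List[Event] = []
    for timestamp, intensity in samples[1:]:
        log_intensity = math.log(intensity)
        # A large change crosses the threshold several times: one event per crossing.
        while abs(log_intensity - reference) >= threshold:
            polarity = 1 if log_intensity > reference else -1
            reference += polarity * threshold
            events.append((timestamp, polarity))
    return events
```

A small brightness change below the threshold produces no events at all, which is why static scenes yield very little data.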

Datasets

Due to the recent commercial release of event cameras, only a few event-based datasets are currently publicly available. These datasets still lack the data variety and annotation quality that are common in RGB datasets. These considerations motivated us to exploit the logarithmic difference of consecutive RGB frames to simulate event data, so that we can take advantage of non-event public automotive datasets, which are richer in annotations and data quality, in addition to two recent event-based automotive datasets. We use the following datasets:

- End-to-end DAVIS Driving Dataset (DDD17): the first open dataset of annotated event-based driving recordings. The dataset was captured with a DAVIS sensor and includes both gray-level frames and event data. Sequences were recorded in urban and highway scenarios, during day and night, and under different weather conditions.

- Multi Vehicle Stereo Event Camera Dataset (MVSEC): this dataset contains data acquired from four different vehicles, in both indoor and outdoor environments, during day and night, using a pair of DAVIS 346B event cameras, a stereo camera, and a Velodyne lidar.

- KITTI Vision Benchmark Suite (KITTI): in this work, we use the KITTI raw subset, which includes 6 hours of RGB image sequences captured in different road scenarios at 10 Hz. The dataset is rich in annotations, such as depth maps and semantic segmentation.

- Cityscapes: this dataset consists of thousands of high-resolution RGB frames with varied and complex scene layouts and backgrounds. Fine and coarse annotations of 30 different object classes are provided for both semantic and instance-wise segmentation. Following the official splits, we select a particular subset in order to use sequences with a frame rate of 17 Hz and to have access to fine semantic segmentation annotations.

Proposed Method

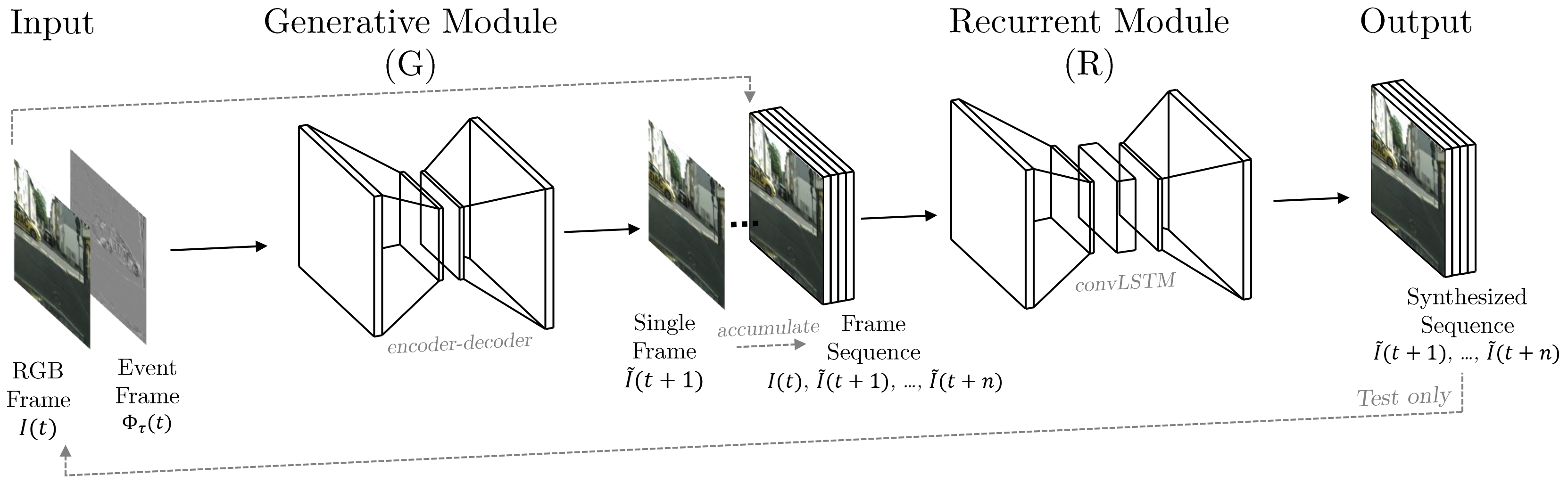

We explore the use of a conditional adversarial network in conjunction with a recurrent module to estimate RGB frames, relying on an initial or a periodic set of color key-frames and a sequence of event frames, i.e. frames that accumulate the events that occurred within a given time interval.
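The data flow described above can be sketched as follows, under stated assumptions. Events are given as hypothetical `(x, y, t, polarity)` tuples, and an event frame is taken to be the per-pixel sum of polarities inside a time window, which is one common representation and not necessarily the one used in the paper. The trained conditional adversarial generator is replaced by a placeholder `toy_generator`, so only the recurrent rollout with optional periodic key-frames is illustrated; all function names are illustrative.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Frame = List[List[float]]
Event = Tuple[int, int, float, int]  # (x, y, timestamp, polarity in {+1, -1})


def events_to_frame(events: Sequence[Event], height: int, width: int,
                    t_start: float, t_end: float) -> Frame:
    """Accumulate the events that occurred in [t_start, t_end) into one frame
    by summing their polarities per pixel."""
    frame = [[0.0] * width for _ in range(height)]
    for x, y, t, polarity in events:
        if t_start <= t < t_end:
            frame[y][x] += polarity
    return frame


def synthesize_sequence(first_frame: Frame,
                        event_frames: Sequence[Frame],
                        generator: Callable[[Frame, Frame], Frame],
                        key_frames: Optional[Dict[int, Frame]] = None) -> List[Frame]:
    """Recurrent rollout: estimate of frame k+1 = generator(frame k, event frame k).

    Frame k is the previous estimate, unless `key_frames` provides a real
    frame for index k (periodic key-frames).
    """
    key_frames = key_frames or {}
    current = first_frame
    estimates: List[Frame] = []
    for k, event_frame in enumerate(event_frames):
        current = key_frames.get(k, current)  # use a real key-frame when available
        current = generator(current, event_frame)
        estimates.append(current)
    return estimates


def toy_generator(previous: Frame, event_frame: Frame, step: float = 0.25) -> Frame:
    """Placeholder for the trained network: shifts each pixel by its event count."""
    return [[p + step * e for p, e in zip(p_row, e_row)]
            for p_row, e_row in zip(previous, event_frame)]
```

Feeding each estimate back as the next input is what makes the module recurrent; re-injecting a real key-frame from time to time is one plausible way to limit the drift that such a rollout can accumulate.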

Moreover, we propose to train our model on simulated event data, obtained by means of image differences: this solution brings two significant advantages. First, event-based methods can be evaluated on standard datasets with annotations, which are often not available in the event domain. Second, models trained on simulated event data can be used with real event data, which is unseen during training.
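One possible way to obtain simulated event frames from two consecutive RGB frames is to threshold the difference of their log intensities, as sketched below. This is an assumption-level sketch: the BT.601 luma weights, the `eps` offset that avoids log(0), the contrast threshold and the ternary {-1, 0, +1} output are illustrative choices, not the exact procedure or values of the paper.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def to_log_gray(image: Sequence[Sequence[RGB]], eps: float = 1e-3) -> List[List[float]]:
    """Convert an RGB image with values in [0, 1] to log luminance.

    Uses ITU-R BT.601 luma weights; eps avoids log(0) on black pixels.
    """
    return [[math.log(0.299 * r + 0.587 * g + 0.114 * b + eps) for r, g, b in row]
            for row in image]


def simulate_event_frame(prev_rgb: Sequence[Sequence[RGB]],
                         next_rgb: Sequence[Sequence[RGB]],
                         threshold: float = 0.2) -> List[List[int]]:
    """Simulated event frame from two consecutive RGB frames (hypothetical helper).

    Each pixel is +1 (brighter) or -1 (darker) when the log-intensity change
    reaches `threshold` in magnitude, and 0 otherwise.
    """
    prev_log = to_log_gray(prev_rgb)
    next_log = to_log_gray(next_rgb)
    frame: List[List[int]] = []
    for row_prev, row_next in zip(prev_log, next_log):
        out_row: List[int] = []
        for before, after in zip(row_prev, row_next):
            diff = after - before
            if diff >= threshold:
                out_row.append(1)
            elif diff <= -threshold:
                out_row.append(-1)
            else:
                out_row.append(0)
        frame.append(out_row)
    return frame
```

Working in the log domain mimics the fact that event pixels respond to relative rather than absolute brightness changes, so the same threshold applies to dark and bright regions.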

More generally, we propose to shift from the event-based domain to a domain in which more expertise and mature vision algorithms are available.

As a case study, we focus on the automotive context, in which event and intensity cameras could cover a variety of applications. For instance, the growing number of high-quality cameras installed on recent cars requires a large bandwidth on both the internal and the external network: transmitting only key-frames and events might reduce the bandwidth requirements while still maintaining a high temporal resolution.
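The bandwidth argument can be illustrated with a back-of-the-envelope estimate. Every number here is hypothetical: the resolution, frame rate, key-frame period, event rate and the 8 bytes per event encoding are illustrative assumptions, compression is ignored on both sides, and real event rates depend strongly on scene motion.

```python
def raw_video_bitrate(width: int, height: int, fps: float,
                      bytes_per_pixel: int = 3) -> float:
    """Bytes per second for uncompressed frames."""
    return width * height * bytes_per_pixel * fps


def keyframe_plus_events_bitrate(width: int, height: int, fps: float,
                                 key_every: int, events_per_second: float,
                                 bytes_per_pixel: int = 3,
                                 bytes_per_event: int = 8) -> float:
    """Bytes per second when only one frame every `key_every` is sent, plus events."""
    keyframes = raw_video_bitrate(width, height, fps / key_every, bytes_per_pixel)
    return keyframes + events_per_second * bytes_per_event


# Hypothetical setting: 1280x720 RGB at 30 fps, a key-frame every 10 frames,
# and 1 million events per second.
full = raw_video_bitrate(1280, 720, 30)
reduced = keyframe_plus_events_bitrate(1280, 720, 30, key_every=10,
                                       events_per_second=1_000_000)
print(f"{full / 1e6:.1f} MB/s vs {reduced / 1e6:.1f} MB/s")
```

Under these assumed numbers the script prints `82.9 MB/s vs 16.3 MB/s`; with a busier scene the event term grows and the saving shrinks accordingly.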

Application of mature vision algorithms (Semantic Segmentation and Object Detection)

For further details and high-quality images, please read the paper and the supplementary material.

Publications

1. Pini, Stefano; Borghi, Guido; Vezzani, Roberto. "Learn to See by Events: Color Frame Synthesis from Event and RGB Cameras". Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, vol. 4, pp. 37-47, Valletta, Malta, 27-29 February 2020. DOI: 10.5220/0008934700370047 (conference paper)

2. Pini, Stefano; Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "Video synthesis from Intensity and Event Frames". Proceedings of the 20th International Conference on Image Analysis and Processing, Trento, Italy, 9-13 September 2019. DOI: 10.1007/978-3-030-30642-7_28 (conference paper)