Research on Computer Vision and Pattern Recognition

Visualization Techniques for Explainable AI

Explaining neural network predictions can increase the transparency of models and help justify correct and incorrect outputs in a human-friendly way. There have been many attempts to explain the inference process in different forms. Among them, the Class Activation Mapping (CAM) approach has proven particularly effective at providing visual explanations, which it builds as a weighted combination of the activation maps of a convolutional layer. We focused on improving the evaluation and reproducibility of such approaches. To this end, we designed a novel evaluation protocol for quantifying explanation maps, which is more effective and simplifies comparisons between approaches. Our proposed score, named ADCC, overcomes the limitations of existing metrics by jointly considering the correctness, coherency, and complexity of the explanation maps in a single-valued metric. Experiments on five CAM-based visualization methods and six backbones have confirmed the appropriateness of the proposal and its generality across different settings.
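The paragraph above relies on two ideas: a CAM is a weighted sum of activation maps, and ADCC folds three scores into one number. The sketch below illustrates both in plain Python. All function names are hypothetical.

`class_activation_map` leaves the choice of weights to the caller, because that choice differs between CAM variants. The original CAM uses the classifier weights that follow global average pooling, while Grad-CAM uses spatially averaged gradients.

`adcc` assumes the three component scores have already been computed and lie in [0, 1], with correctness expressed as an average drop in class confidence. It combines them with a harmonic mean. Treat this aggregation as an assumption of the sketch, not as the reference implementation.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def class_activation_map(activations: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    """Weighted sum M(i, j) = sum_k w_k * A_k(i, j) over K activation maps of size H x W."""
    if len(activations) != len(weights):
        raise ValueError("expected one weight per activation map")
    h, w = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for a_k, w_k in zip(activations, weights):
        for i in range(h):
            for j in range(w):
                cam[i][j] += w_k * a_k[i][j]
    return cam


def normalize_map(cam: Matrix) -> Matrix:
    """Keep positive evidence only (ReLU) and rescale to [0, 1] for visualization."""
    relu = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in relu)
    if peak == 0.0:
        return relu  # no positive evidence anywhere in the map
    return [[v / peak for v in row] for row in relu]


def adcc(average_drop: float, coherency: float, complexity: float) -> float:
    """Hypothetical ADCC sketch: harmonic mean of coherency, 1 - complexity
    and 1 - average_drop, all assumed to lie in [0, 1]. Higher is better."""
    terms = [coherency, 1.0 - complexity, 1.0 - average_drop]
    if any(t <= 0.0 for t in terms):
        return 0.0  # limit of the harmonic mean when any term reaches zero
    return 3.0 / sum(1.0 / t for t in terms)
```

Worked example: with `activations = [[[1, 0], [0, 0]], [[0, 0], [0, 2]]]` and `weights = [0.5, 1.0]`, the raw map is `[[0.5, 0.0], [0.0, 2.0]]`. The normalized map is `[[0.25, 0.0], [0.0, 1.0]]`.

A harmonic mean is dominated by its smallest term. As a result, a map cannot score well by excelling on one criterion while failing another.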

Connected Components Labeling

In 2010, research on Connected Component Labeling (CCL) algorithms led to an open-source implementation of a very fast labeling routine called Block Based with Decision Trees (BBDT). Recently, our research group released YACCLAB (Yet Another Connected Component Labeling Algorithm Benchmark), an open-source CCL benchmark that evaluates and compares the performance of state-of-the-art labeling algorithms. The benchmark confirms the very good performance of the BBDT algorithm, which is now the default algorithm included in the OpenCV library.
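BBDT scans the image in 2×2 blocks and uses an optimized decision tree to decide which neighbors to inspect. Reproducing it is beyond a short example. The sketch below instead shows the problem that BBDT and the other algorithms in YACCLAB solve, using the classic pixel-based two-pass scheme with union-find equivalence resolution and 8-connectivity. It is not BBDT, and the function name `label_components` is hypothetical.

```python
from typing import List


def label_components(image: List[List[int]]) -> List[List[int]]:
    """Two-pass 8-connectivity labeling of a binary image (nonzero = foreground).

    Background stays 0; components are numbered 1..N in raster order of
    their first pixel.
    """
    h = len(image)
    w = len(image[0]) if h else 0
    labels = [[0] * w for _ in range(h)]
    parent = [0]  # union-find forest over provisional labels; 0 = background

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a: int, b: int) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[max(ra, rb)] = min(ra, rb)  # keep the smaller label as root

    # First pass: assign provisional labels from already-visited neighbors
    # (west, north-west, north, north-east) and record their equivalences.
    for r in range(h):
        for c in range(w):
            if not image[r][c]:
                continue
            neighbors = []
            for dr, dc in ((0, -1), (-1, -1), (-1, 0), (-1, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w and labels[rr][cc]:
                    neighbors.append(labels[rr][cc])
            if not neighbors:
                parent.append(len(parent))  # new label is its own root
                labels[r][c] = len(parent) - 1
            else:
                smallest = min(neighbors)
                labels[r][c] = smallest
                for n in neighbors:
                    union(smallest, n)

    # Second pass: resolve each provisional label to its root and renumber
    # the roots consecutively.
    final = {}
    for r in range(h):
        for c in range(w):
            if labels[r][c]:
                root = find(labels[r][c])
                if root not in final:
                    final[root] = len(final) + 1
                labels[r][c] = final[root]
    return labels
```

For example, `[[1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 1, 1]]` is labeled `[[1, 1, 0, 0], [0, 0, 0, 2], [3, 0, 2, 2]]`.

Block-based methods such as BBDT perform the same two passes. They reduce the number of neighbor reads by assigning one label per 2×2 block. In OpenCV the task is exposed through `cv2.connectedComponents`.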

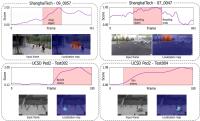

Visual Saliency Prediction

When human observers look at an image, attentive mechanisms drive their gaze towards salient regions. Neuroscientists and computer vision researchers have studied how to emulate this ability for more than 80 years. Only recently, thanks to the widespread adoption of deep learning, have saliency prediction models improved considerably. Motivated by the importance of automatically estimating the human focus of attention on images, we developed two saliency prediction models. They outperformed previous methods by a large margin and won the LSUN Saliency Challenge at CVPR 2017 in Honolulu, Hawaii.

Animal Welfare Analysis from 3D Sensors

In many European countries, stray dog populations are a serious concern for human health and safety, and for the dogs themselves [1]. The main population control measure in Italy, as in other countries, is to confine stray dogs in shelter facilities until they are re-homed. Unfortunately, intake rates often exceed adoption rates, which leads to overcrowded shelters where dogs are likely to spend most of their lives. We propose a computer vision system for evaluating the behaviour and well-being of the dogs housed in these shelters.

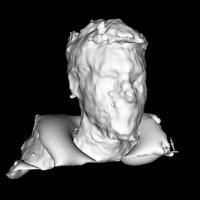

3D Computer Vision

Three-dimensional object modeling and recognition from range data and 3D point clouds are becoming increasingly important. We are studying new solutions and algorithms based on 3D point clouds, for applications ranging from industry to human-computer interaction.

Novelty Detection

Novelty detection is the identification of events that do not conform to expected behavior. Standard supervised classification is unsuitable for this goal, since the nature of novel examples cannot be known a priori. For this reason, we tackle the problem in a semi-supervised learning setting. We are interested in formalizing and assessing new models that learn the distribution of regular events and flag as novel the events that are least likely under that distribution.
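The semi-supervised recipe described above trains only on regular events and flags low-likelihood events as novel. The sketch below illustrates that recipe with a deliberately simple density model: an axis-aligned Gaussian fitted to regular samples. It is a stand-in for illustration, not one of the group's models.

Samples are scored by negative log-likelihood, so a higher score means more novel. The class name and the threshold rule (the largest score observed on the regular training data) are hypothetical choices.

```python
import math
from statistics import fmean, pvariance
from typing import Sequence


class GaussianNoveltyDetector:
    """Hypothetical detector: diagonal Gaussian fitted on regular samples only."""

    def __init__(self, eps: float = 1e-6):
        self.eps = eps  # variance floor to avoid division by zero

    def fit(self, regular: Sequence[Sequence[float]]) -> "GaussianNoveltyDetector":
        columns = list(zip(*regular))  # one tuple of values per feature
        self.means = [fmean(col) for col in columns]
        self.vars = [pvariance(col) + self.eps for col in columns]
        # Assumed threshold rule: the least likely regular sample seen in training.
        self.threshold = max(self.score(x) for x in regular)
        return self

    def score(self, x: Sequence[float]) -> float:
        """Negative log-likelihood of x under the fitted diagonal Gaussian."""
        nll = 0.0
        for xi, mu, var in zip(x, self.means, self.vars):
            nll += 0.5 * (math.log(2.0 * math.pi * var) + (xi - mu) ** 2 / var)
        return nll

    def is_novel(self, x: Sequence[float]) -> bool:
        return self.score(x) > self.threshold
```

Fitted on `[[0.0, 0.0], [1.0, 1.0], [0.0, 1.0], [1.0, 0.0]]`, the detector accepts `[0.5, 0.5]` and flags `[10.0, 10.0]` as novel. A percentile of the training scores is a common, less strict alternative to the maximum.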