Research on Embodied AI

Personalized Instance-based Navigation

In recent years, research interest in visual navigation towards objects in indoor environments has grown significantly. This growth can be attributed to the recent availability of large navigation datasets in photo-realistic simulated environments, like Gibson and Matterport3D. However, the navigation tasks supported by these datasets are often restricted to the objects present in the environment at acquisition time. They also fail to account for the realistic scenario in which the target object is a user-specific instance that can be easily confused with similar objects and may be found in multiple locations within the environment. To address these limitations, we propose a new task called Personalized Instance-based Navigation (PIN), in which an embodied agent is tasked with locating and reaching a specific personal object by distinguishing it among multiple instances of the same category. The task is accompanied by PInNED, a dedicated new dataset composed of photo-realistic scenes augmented with additional 3D objects. In each episode, the target object is presented to the agent using two modalities: a set of visual reference images on a neutral background and manually annotated textual descriptions. Through comprehensive evaluations and analyses, we showcase the challenges of the PIN task as well as the performance and shortcomings of currently available methods designed for object-driven navigation, considering both modular and end-to-end agents.
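To make the instance-level challenge concrete, the following minimal sketch shows one possible way to tell a personal object apart from same-category distractors: compare a feature vector for each detected object against feature vectors of the reference images and keep the best match above a threshold. This is an illustrative assumption, not the method evaluated in the project; the function names, the toy 3-dimensional embeddings, and the threshold value are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def match_target(reference_embeddings, candidates, threshold=0.8):
    """Return the id of the candidate that best matches any reference view.

    `candidates` maps a (hypothetical) object id to its embedding.
    Returns None when no candidate exceeds `threshold`, i.e. when only
    distractors of the same category are in view.
    """
    best_id, best_score = None, threshold
    for cand_id, emb in candidates.items():
        score = max(cosine(emb, ref) for ref in reference_embeddings)
        if score > best_score:
            best_id, best_score = cand_id, score
    return best_id


# Toy data: two reference views of the user's mug and two detected mugs.
references = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
detections = {"my_mug": [0.95, 0.05, 0.0], "other_mug": [0.5, 0.5, 0.7]}
print(match_target(references, detections))
```

In a real agent the embeddings would come from a visual encoder, and the textual description could provide a second matching signal; both are left out here to keep the sketch self-contained.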

Embodied Vision-and-Language Navigation

Effective instruction following and contextual decision-making open up new research directions in embodied AI. Deep neural networks have the potential to build complex reasoning rules that enable the creation of intelligent agents, and research on this subject could also help to empower the next generation of collaborative robots. In this scenario, Vision-and-Language Navigation (VLN) plays a significant part in current research. This task requires an agent to follow natural language instructions through unknown environments, discovering the correspondences between linguistic and visual perception step by step. Additionally, the agent needs to progressively adjust its navigation in light of the history of past actions and explored areas. Even a small error while planning the next move can lead to failure because perception and actions are unavoidably entangled; indeed, "we must perceive in order to move, but we must also move in order to perceive". For this reason, the agent can succeed in this task only by efficiently combining the three modalities: language, vision, and actions.

Self-Supervised Navigation and Recounting

Advances in the field of embodied AI aim to foster the next generation of autonomous and intelligent robots. At the same time, tasks at the intersection of computer vision and natural language processing are of particular interest to the community, with image captioning being one of the most active areas. By describing the content of an image or a video, captioning models can bridge the gap between the black-box architecture and the user. In this project, we propose a new task at the intersection of embodied AI, computer vision, and natural language processing, and aim to create a robot that can navigate through a new environment and describe what it sees. We call this new task Explore and Explain since it tackles the problem of joint exploration and captioning. In this setting, the agent needs to perceive the environment around itself, navigate it driven by an exploratory goal, and describe salient objects and scenes in natural language. Beyond navigating the environment and translating visual cues into natural language, the agent also needs to identify appropriate moments to perform the explanation step.
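The problem of choosing when to explain can be illustrated with a toy loop. The sketch below is only an assumption about how such a trigger might look: the agent emits a description whenever enough not-yet-described objects are in view. The function name, the `min_new_objects` parameter, and the `describe` callback (standing in for a captioning model) are hypothetical and do not reflect the project's actual architecture.

```python
def explore_and_explain(observations, describe, min_new_objects=2):
    """Walk through per-step observations and caption at novel moments.

    `observations` is a list where each item holds the object labels
    visible at that navigation step. `describe` stands in for a
    captioning model. A caption is produced only when at least
    `min_new_objects` visible objects have not been described yet.
    """
    described = set()
    captions = []
    for step, visible in enumerate(observations):
        new_objects = set(visible) - described
        if len(new_objects) >= min_new_objects:
            captions.append((step, describe(sorted(new_objects))))
            described |= new_objects
    return captions


# Toy trajectory: four steps with the objects seen at each one.
trajectory = [["sofa"], ["sofa", "lamp"], ["lamp"], ["table", "chair", "lamp"]]
captions = explore_and_explain(trajectory, lambda objs: "I see " + ", ".join(objs))
for step, text in captions:
    print(step, text)
```

With this trigger the agent stays silent at steps where nothing new is in view, which avoids repeating the same description along a corridor; a learned policy could replace the fixed count.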

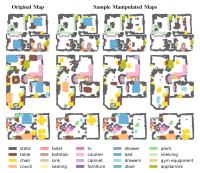

Embodied Agents for Differences Discovery in Dynamic Environments

Imagine you have just bought a personal robot, and you ask it to bring you a cup of tea. It will start roaming around the house while looking for the cup. It will probably take several minutes to come back, as it is new to the environment. Once the robot knows your house, however, you expect it to perform navigation tasks much faster, exploiting its previous knowledge of the environment while adapting to possible changes in the positions of objects, people, and furniture. As agents are likely to stay in the same place for long periods, such information may become outdated and inconsistent with the actual layout of the environment. Therefore, the agent also needs to discover those differences during navigation.
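As a minimal illustration of what discovering differences can mean, the sketch below compares a stored occupancy grid with the cells the agent has re-observed and reports where they disagree. The grid representation (0 = free, 1 = occupied, `None` = not yet re-observed) and the function name are assumptions made for this example, not the representation used in the project.

```python
def find_differences(stored_map, observed_map):
    """Return (row, col) cells whose occupancy changed since the stored map.

    Cells marked None in `observed_map` have not been re-observed yet,
    so nothing can be said about them and they are skipped.
    """
    changes = []
    for r, (old_row, new_row) in enumerate(zip(stored_map, observed_map)):
        for c, (old, new) in enumerate(zip(old_row, new_row)):
            if new is not None and new != old:
                changes.append((r, c))
    return changes


# The chair at (0, 1) was removed and a box appeared at (1, 1);
# cell (1, 0) has not been revisited yet.
stored = [[0, 1], [0, 0]]
observed = [[0, 0], [None, 1]]
print(find_differences(stored, observed))
```

The agent could then prioritize visiting unobserved cells near detected changes, since furniture is rarely moved in isolation.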

Acquisition of 3D Environments for Robotic Navigation

Matterport technology makes it possible to create a “digital twin” of a physical indoor space. With the Matterport Pro2 camera, it is easy to acquire 3D information from an environment and to create a virtual space in which to train embodied agents. For this reason, the recently proposed Matterport3D dataset of spaces has attracted a lot of interest from the research community. Our aim is to acquire new environments, and we are particularly interested in places related to Italian Cultural Heritage. In this project, we present the 3D reconstruction of the Galleria Estense of Modena.