SARC3D

A new dataset for people reidentification in videos

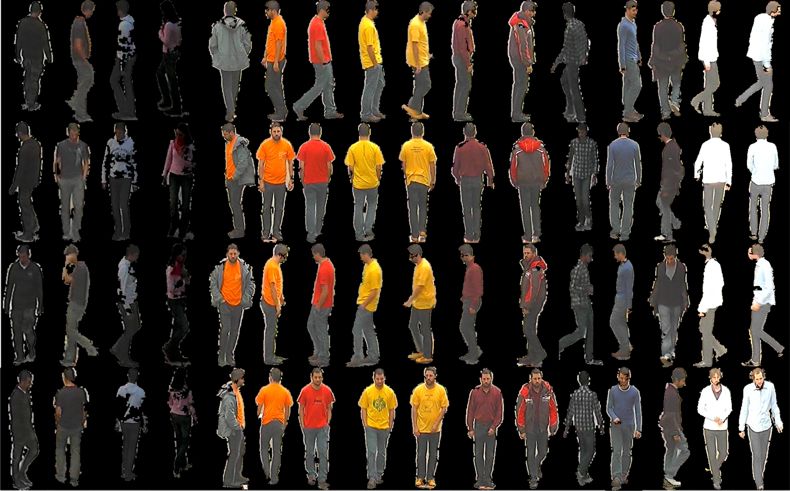

| In order to effectively test the SARC3D method, we created a new dataset with 50 people, consisting of short video clips captured with a calibrated camera. To simplify the model to image alignment, we manually selected four frames for each clip corresponding to predefined positions and postures of the people. Thus the annotated data set is composed by four views for each person, 200 snapshots in total. Some examples are shown in the Figure, where the output of the foreground segmentation is reported. |

Download:

For problems or suggestions: contact Roberto Vezzani - Webmaster: Roberto Vezzani - ©2007