Cineca SuperComputing Resource Allocation

Deep learning has attracted wide attention in the computer vision and machine learning communities because of its strong performance on image and video tasks such as image classification, object detection, semantic segmentation and action recognition. Algorithms that follow this approach use large-scale deep neural networks to learn patterns from huge amounts of data, and they require modern GPU architectures to keep training times acceptable. In this context, we are developing and testing innovative deep learning architectures along three lines of research, summarized below.

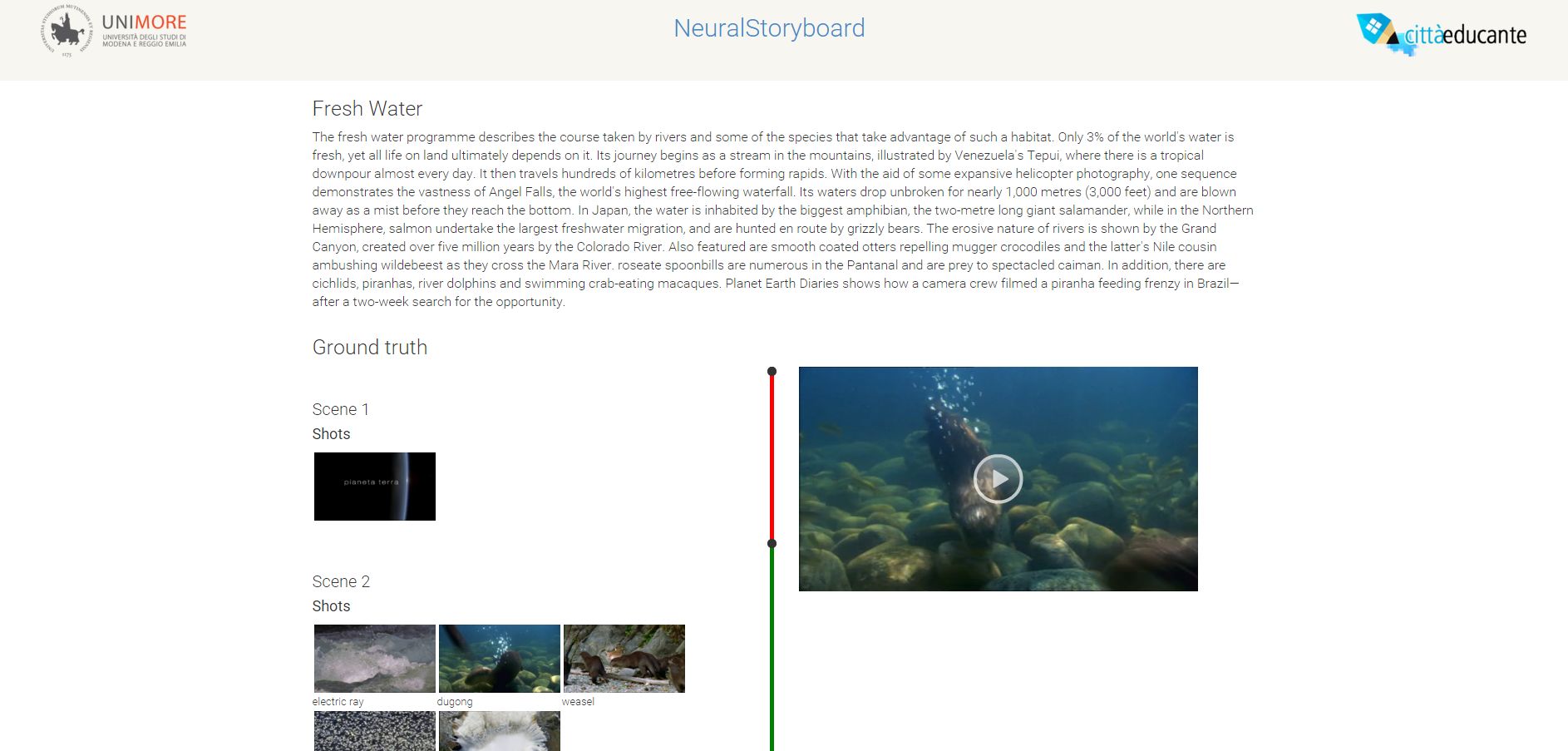

Video annotation and semantic concept extraction for automatic video production

Following the results of the ISCRA project "DeepVid", we aim to develop algorithms for automatic video annotation in support of services for semi-automated video production. This will enable the reuse of existing video footage and improve retrieval within video collections. One powerful means of automatic annotation will be video captioning, which generates short descriptions of an input video. Until now, video captioning has relied on visual features only and has been applied to simple, general-purpose user-generated videos. The project will explore new multi-modal captioning algorithms that combine CNNs with LSTM (long short-term memory) architectures, to support video harvesting and retrieval.

Gaze prediction for context and actions understanding

A second line of research is gaze and saliency prediction. When humans observe a scene, attentional mechanisms draw their gaze to salient regions and actions. Neuroscientists and computer vision researchers have studied how to emulate this ability for more than 80 years. Thanks to deep learning methods, saliency algorithms have shown impressive results on images, but they have rarely been applied to videos. In the project, gaze prediction algorithms, trained on huge datasets, will be developed with a focus on two application domains: predicting the popularity and engagement of a video, to assist the video production process, and monitoring the attention levels of drivers, to develop assistive in-vehicle technologies.
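
As a rough illustration of how a saliency map relates to a gaze prediction, the sketch below treats a small 2-D grid of non-negative scores as the output of some saliency model, rescales it into a probability distribution, and takes its peak as the most likely fixation point. The function names and the toy map are hypothetical and are not taken from the project's code; a real model would produce a much larger map, usually handled with an array library.

```python
def normalize_saliency(saliency):
    """Rescale a 2-D map of non-negative scores so that all cells sum to 1."""
    total = sum(sum(row) for row in saliency)
    if total <= 0:
        raise ValueError("saliency map must contain at least one positive value")
    return [[value / total for value in row] for row in saliency]


def predicted_gaze_point(saliency):
    """Return (row, col) of the most salient cell (first one found on ties)."""
    cells = (
        (value, r, c)
        for r, row in enumerate(saliency)
        for c, value in enumerate(row)
    )
    _, best_row, best_col = max(cells, key=lambda cell: cell[0])
    return best_row, best_col


# Toy 2x2 map standing in for a model output (illustrative values only).
toy_map = [[0.0, 1.0],
           [3.0, 0.0]]
probabilities = normalize_saliency(toy_map)
peak = predicted_gaze_point(toy_map)
```

Taking the single peak is the simplest possible readout; the normalized map keeps the full distribution when several regions compete for attention.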

Deep Learning for driver monitoring

The ability to monitor the behavior of the driver and passengers is fundamental to enabling autonomous driving, especially in a transition phase in which traditional and autonomous cars coexist. In this context, a non-intrusive vision-based monitoring system that operates with cameras inside the vehicle is required. We will develop frameworks specifically designed for driver monitoring, with particular regard to the real-time estimation of the pose of the head and of the upper part of the driver's torso. Head pose estimation, a fundamental element of attention analysis, gaze tracking and human-computer interaction, will be a concrete way to monitor driver attention. Driver body posture will also be investigated, since the driver may change posture in preparation for driving actions or because of physical accidents.
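
To make the link between head pose and attention concrete, the following minimal sketch converts an estimated head yaw and pitch into a forward-facing direction and flags the driver as distracted when that direction deviates from the road axis by more than a threshold. The axis convention (x right, y up, z toward the road), the function names and the 30-degree threshold are all assumptions chosen for illustration; they do not describe the project's actual pipeline.

```python
import math


def head_direction(yaw_deg, pitch_deg):
    """Unit vector of the head's forward direction.

    Assumed convention: x points right, y up, z forward toward the road;
    yaw rotates about y, pitch about x, and (0, 0) looks straight ahead.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    x = math.cos(pitch) * math.sin(yaw)
    y = math.sin(pitch)
    z = math.cos(pitch) * math.cos(yaw)
    return (x, y, z)


def off_road_angle(yaw_deg, pitch_deg):
    """Angle in degrees between the head direction and the road axis (0, 0, 1)."""
    _, _, z = head_direction(yaw_deg, pitch_deg)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, z))))


def is_distracted(yaw_deg, pitch_deg, threshold_deg=30.0):
    """True when the head points away from the road by more than the threshold.

    The default threshold is an illustrative value, not a validated one.
    """
    return off_road_angle(yaw_deg, pitch_deg) > threshold_deg
```

For example, `is_distracted(60.0, 0.0)` flags a head turned far to the side, while `is_distracted(10.0, 5.0)` does not. A practical system would also smooth the estimates over time so that brief mirror checks are not reported as distraction.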

The proposed research directions are expected to have significant scientific impact, with publications both in international conferences, such as CVPR, ICCV and ACM Multimedia, and in top-class journals, such as IEEE Transactions on PAMI, IJCV and Pattern Recognition.

Publications

| # | Publication | Type |
|---|---|---|
| 1 | Baraldi, Lorenzo; Grana, Costantino; Cucchiara, Rita. "Recognizing and Presenting the Storytelling Video Structure with Deep Multimodal Networks". IEEE Transactions on Multimedia, vol. 19, pp. 955-968, 2017. DOI: 10.1109/TMM.2016.2644872 | Journal |
| 2 | Baraldi, Lorenzo; Grana, Costantino; Cucchiara, Rita. "Hierarchical Boundary-Aware Neural Encoder for Video Captioning". Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on, vol. 2017-, Honolulu, Hawaii, pp. 3185-3194, July 22-25, 2017. DOI: 10.1109/CVPR.2017.339 | Conference |
| 3 | Cornia, Marcella; Baraldi, Lorenzo; Serra, Giuseppe; Cucchiara, Rita. "Visual Saliency for Image Captioning in New Multimedia Services". Multimedia & Expo Workshops (ICMEW), 2017 IEEE International Conference on, Hong Kong, pp. 309-314, July 10-14, 2017. DOI: 10.1109/ICMEW.2017.8026277 | Conference |
| 4 | Baraldi, Lorenzo; Grana, Costantino; Cucchiara, Rita. "NeuralStory: an Interactive Multimedia System for Video Indexing and Re-use". Proceedings of the 15th International Workshop on Content-Based Multimedia Indexing, Florence, Italy, 19-21 June 2017. DOI: 10.1145/3095713.3095735 | Conference |
| 5 | Baraldi, Lorenzo; Grana, Costantino; Messina, Alberto; Cucchiara, Rita. "A Browsing and Retrieval System for Broadcast Videos using Scene Detection and Automatic Annotation". Proceedings of the 2016 ACM on Multimedia Conference, Amsterdam, The Netherlands, pp. 733-734, 15-19 October 2016. DOI: 10.1145/2964284.2973825 | Conference |
| 6 | Cornia, Marcella; Baraldi, Lorenzo; Serra, Giuseppe; Cucchiara, Rita. "Multi-Level Net: a Visual Saliency Prediction Model". Computer Vision – ECCV 2016 Workshops, vol. 9914, Amsterdam, The Netherlands, pp. 302-315, October 9th, 2016. DOI: 10.1007/978-3-319-48881-3_21 | Conference |

Project Info

Staff:

- Rita Cucchiara

- Costantino Grana

- Simone Calderara

- Lorenzo Baraldi

- Guido Borghi

- Marcella Cornia

- Andrea Palazzi