Action and Gesture Recognition for Human Computer Interaction

This research activity addresses the problem of human action and gesture recognition. In particular, we are developing a complete framework for Human Computer Interaction (HCI) in which each user can adopt custom gestures. Continuous gesture recognition, one-shot learning, and transfer learning algorithms are taken into account.

HCI is the discipline that studies models and techniques for the interaction between people and computers. Its history begins in the 1970s, when Command Line Interfaces (CLIs) were created. Although CLIs are fast, they are hard to use because of their mnemonic component: users have to remember the right, precise command to interact with the computer. In the 1980s, Graphical User Interfaces (GUIs) were developed. They are more user-friendly than CLIs and introduced new devices (such as the mouse) and metaphors (such as windows, drag-and-drop, and the desktop). Natural User Interfaces (NUIs) were conceived in the 1990s. They are intuitive and invisible because users do not need any physical device to interact with the computer: they simply perform natural actions with their body, which is their natural and innate language.

For these reasons, NUIs require systems that can automatically detect and recognize actions and gestures in a video stream. NUIs have recently gained prominence thanks to new low-cost technologies that make it easy to detect and precisely track human body joints in 3D space.

Methods

Currently we are working with:

- Hidden Markov Models (HMM): HMMs have been widely used for action recognition, since they can easily model the temporal evolution of a single numeric feature, or a set of them, extracted from the data. The key design choices are the feature set and the corresponding emission probability function. If the training set is not sufficiently large, manual or automatic feature selection and reduction is mandatory.

In particular, we are studying two HMM variants:

- Continuous HMM (with Gaussian Mixture Model): we adopt a Gaussian Mixture Model (GMM) as the emission function. This simplifies the learning phase because the Baum-Welch algorithm can estimate the HMM and the mixture parameters simultaneously, given the number of hidden states and the number of Gaussians per state.

- Discrete HMM: computing the exponential Gaussian terms is time-consuming. Moreover, Gaussian parameters learned from few examples may lead to degenerate cases. Some practical tricks have been proposed for these cases, but overall performance generally decreases when few examples are available in the learning stage. For these reasons, we are focusing on Multi-Stream Discrete HMMs, which require every continuous observation to be mapped to a discrete value. In addition, to improve the classification capability of the HMM, we adopt a set of independent, weighted distributions called streams.

- Dynamic Time Warping (DTW): we adopt DTW to measure the similarity between two temporal sequences that differ in timing and execution speed. This is very common when different people perform even the same action. The method computes the optimal alignment between two streams of observations under certain constraints (for example, slope constraints, path weighting, scaling, etc.).
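To make the Multi-Stream Discrete HMM more concrete, here is a minimal sketch of how a sequence could be scored once the models are trained. It rests on several assumptions. Continuous features are quantized by uniform binning, although other quantizers such as k-means are equally possible. The per-stream emission probabilities are combined as a weighted product, i.e. a weighted sum of log-probabilities. All function names are hypothetical. Training with Baum-Welch is not shown.

```python
import math


def _log(p):
    """log(p), with log(0) = -inf so that zero probabilities are allowed."""
    return math.log(p) if p > 0 else -math.inf


def _logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of log-values."""
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))


def quantize(value, lo, hi, n_bins):
    """Map a continuous feature in [lo, hi] to one of n_bins discrete symbols
    (uniform binning, an illustrative choice)."""
    if value <= lo:
        return 0
    if value >= hi:
        return n_bins - 1
    return int((value - lo) / (hi - lo) * n_bins)


def log_emission(state, frame, B, weights):
    """Multi-stream emission: sum over streams s of w_s * log B[s][state][symbol_s].
    frame holds one discrete symbol per stream; weights must be positive."""
    return sum(w * _log(B[s][state][sym])
               for s, (sym, w) in enumerate(zip(frame, weights)))


def forward_log_likelihood(obs, pi, A, B, weights):
    """Forward algorithm in log space: log P(obs | model).
    pi[j]: initial probability, A[i][j]: transition i -> j,
    B[s][j][k]: probability of symbol k in stream s when in state j."""
    n = len(pi)
    alpha = [_log(pi[j]) + log_emission(j, obs[0], B, weights) for j in range(n)]
    for frame in obs[1:]:
        alpha = [_logsumexp([alpha[i] + _log(A[i][j]) for i in range(n)])
                 + log_emission(j, frame, B, weights)
                 for j in range(n)]
    return _logsumexp(alpha)


def classify(obs, models, weights):
    """Return the name of the model (pi, A, B) with the highest log-likelihood."""
    return max(models, key=lambda name: forward_log_likelihood(obs, *models[name], weights))
```

One model is trained per gesture class, and a new sequence is assigned to the class whose model explains it best. Working in log space avoids numerical underflow on long sequences.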

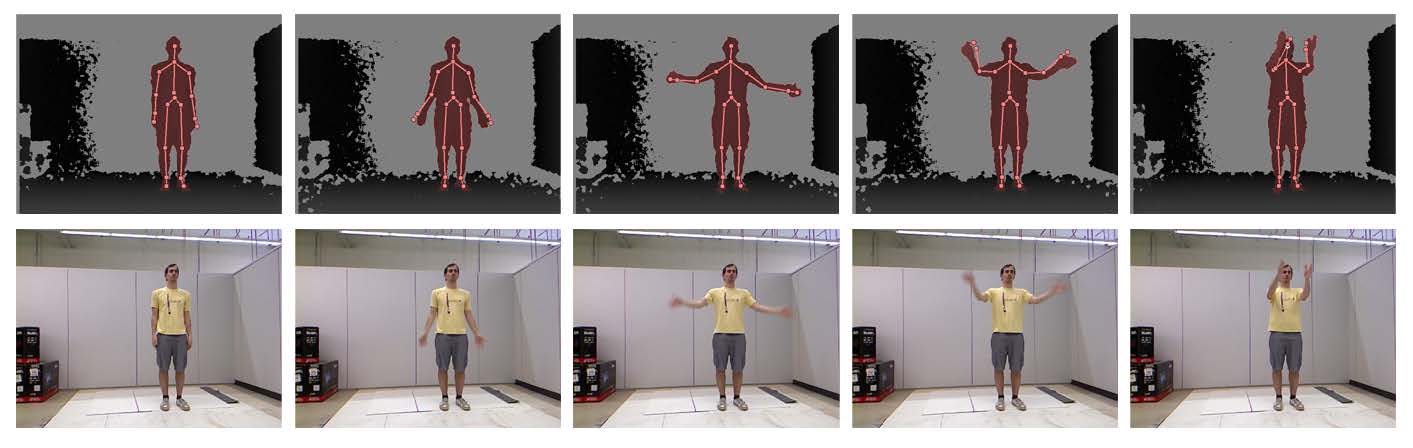
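The DTW comparison described in the Methods list can be sketched with the classic dynamic-programming recurrence below. Each sequence is a list of feature vectors (tuples), and the local cost is the Euclidean distance. As one example of an alignment constraint, the sketch supports an optional Sakoe-Chiba band (`window`), which limits how far the two time axes may drift apart. This band is a common global constraint. It is an illustrative choice and not necessarily the slope constraint or path weighting used in our system. The name `dtw_distance` is hypothetical.

```python
import math


def dtw_distance(x, y, window=None, dist=math.dist):
    """DTW cost between sequences x and y of feature vectors.
    window: optional Sakoe-Chiba band half-width (cells with |i - j| > window
    are never visited); None means no band."""
    n, m = len(x), len(y)
    if window is None:
        window = max(n, m)
    window = max(window, abs(n - m))  # the band must still reach cell (n, m)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - window), min(m, i + window) + 1):
            cost = dist(x[i - 1], y[j - 1])
            # Extend the cheapest of the three allowed predecessor cells.
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]
```

For example, `[(0,), (1,), (2,)]` and `[(0,), (0,), (1,), (1,), (2,)]` describe the same movement performed at different speeds, and their DTW cost is 0. A frame-by-frame comparison of the two would not even be defined, because the lengths differ.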

We are focusing our attention on three types of data:

- RGB frames

- Depth maps

- Skeleton

These data are typically acquired by an RGB-D sensor, such as Microsoft Kinect.

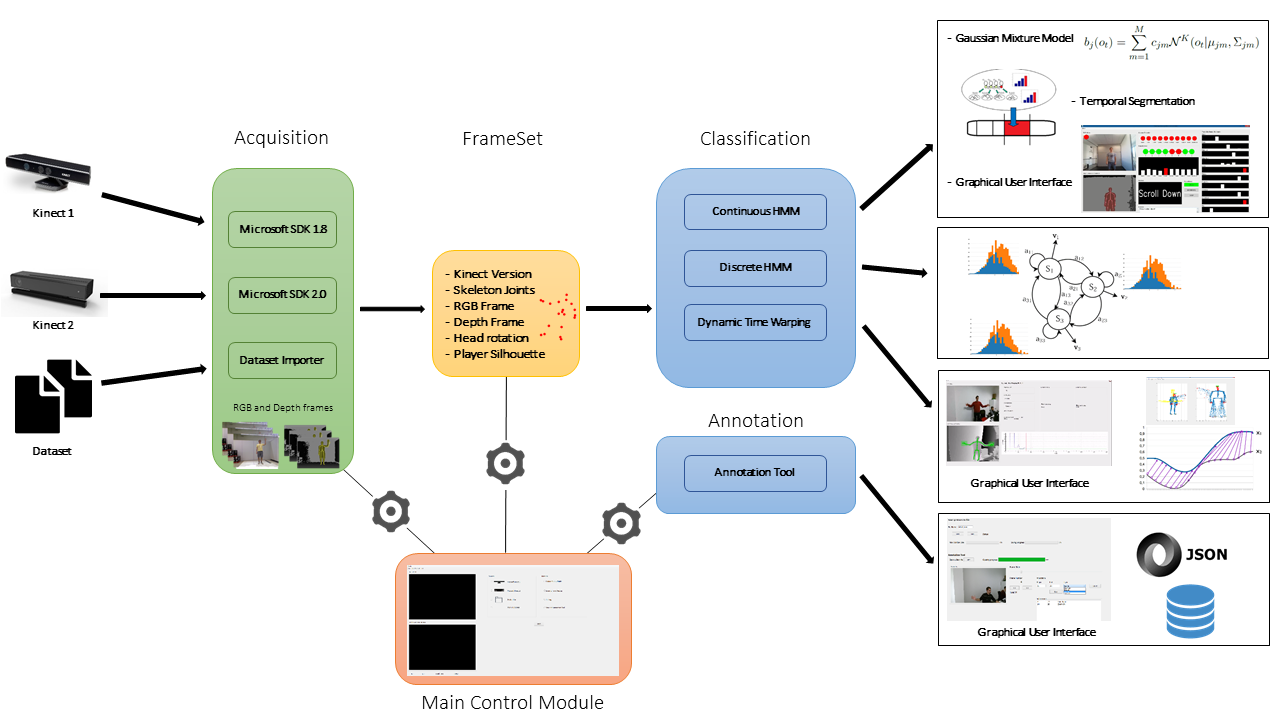

Gesture Recognition Framework

The system that we are developing is called Gesture Recognition Framework (GRF) and is divided into four components:

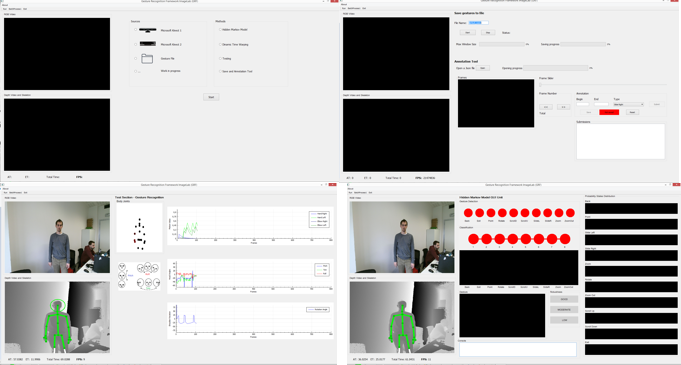

- HMM with GMM for Action Recognition and Segmentation: this component uses a novel approach based on the well-known HMM. The resulting system overcomes the static sliding-window approach without strong prior hypotheses (such as action duration or type), and it automatically detects the beginning and the end of an action without unwanted interactions. A Microsoft Kinect 1 device provides the user's body skeleton and the 3D coordinates of the body joints. Features are then computed as the Euclidean distances between pairs of joints. The system is designed to be robust to accidental interactions, incorrectly performed gestures, and other unwanted interactions. A graphical user interface built with the Qt libraries visualizes the system's details and helps in understanding its dynamics. Experimental results show that the system achieves high scores in both the classification and the segmentation tasks. In addition, the system automatically recognizes when an invalid gesture is performed.

- Multiple Stream Discrete HMM for Offline Action Recognition and Online Temporal Segmentation: (work in progress)

- Dynamic Time Warping for Action Recognition: a simple sliding-window system that localizes gestures within a continuous stream using 3D skeleton data.

- A tool for video acquisition and annotation, used to acquire and create new datasets.
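The feature extraction of the HMM with GMM component (Euclidean distances between joint pairs) can be sketched as follows. The text does not say which joint pairs the system actually uses, so the `pairs` argument is a hypothetical parameter. By default, the sketch takes all pairs, which gives 190 features for the 20-joint Kinect 1 skeleton. The function names are illustrative.

```python
import itertools
import math


def pairwise_distance_features(joints, pairs=None):
    """Feature vector for one frame: Euclidean distances between joint pairs.
    joints: list of (x, y, z) tuples; pairs: optional list of (i, j) indices
    (default: every unordered pair of joints)."""
    if pairs is None:
        pairs = itertools.combinations(range(len(joints)), 2)
    return [math.dist(joints[i], joints[j]) for i, j in pairs]


def sequence_features(frames, pairs=None):
    """Apply the per-frame extraction to a whole skeleton sequence."""
    return [pairwise_distance_features(frame, pairs) for frame in frames]
```

Distances between joints do not change when the user translates or rotates in front of the sensor. This property is useful when users do not stand in exactly the same place.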

GRF can acquire data from:

- Microsoft Kinect 1: 640x480 Color Camera (up to 1280x960), 320x240 Depth Camera, 9-30 fps, range: 0.4-6 metres, skeleton joints: 20, full skeletons tracked: 2

- Microsoft Kinect 2: 1920x1080 Color Camera, 512x424 Depth Camera, skeleton joints: 25, full skeletons tracked: 6

- Various public datasets, via data import

and provides a Graphical User Interface (built with the Qt libraries) for interacting with users.
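The sliding-window DTW component of GRF can be sketched as follows. The details of the actual system are not given in the text, so all of the following are hypothetical choices:

- each window is as long as the gesture template;
- the DTW cost is normalized by the template length and compared against a fixed threshold;
- overlapping detections are resolved greedily, keeping the lowest-cost one.

A compact DTW function is included so that the block is self-contained.

```python
import math


def dtw(x, y):
    """Unconstrained DTW cost between two sequences of feature vectors."""
    n, m = len(x), len(y)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = math.dist(x[i - 1], y[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def localize_gestures(stream, templates, threshold, stride=1):
    """Slide a template-length window over the stream and report
    (start, end, label, cost) wherever the length-normalized DTW cost
    to a template is below the threshold."""
    detections = []
    for label, template in templates.items():
        w = len(template)
        for start in range(0, len(stream) - w + 1, stride):
            cost = dtw(stream[start:start + w], template) / w
            if cost < threshold:
                detections.append((start, start + w, label, cost))
    return detections


def suppress_overlaps(detections):
    """Greedily keep the lowest-cost detections that do not overlap in time."""
    kept = []
    for det in sorted(detections, key=lambda d: d[3]):
        if all(det[1] <= k[0] or det[0] >= k[1] for k in kept):
            kept.append(det)
    return sorted(kept)
```

For a one-dimensional stream `[(0,)] * 3 + [(0,), (1,), (2,)] + [(0,)] * 3` and a single template `{"up": [(0,), (1,), (2,)]}`, `suppress_overlaps(localize_gestures(stream, templates, 0.5))` keeps only `(3, 6, "up", 0.0)`. The window starting at frame 2 also falls under the threshold, but it overlaps the better match and is discarded.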

Datasets

We have used four datasets:

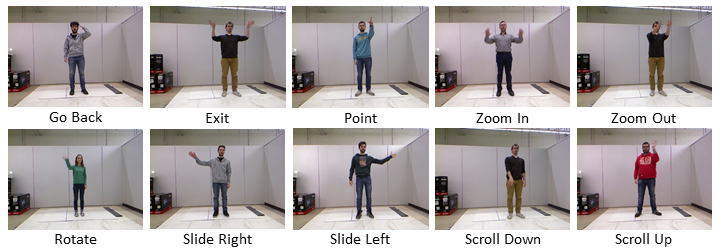

- Kinteract Dataset: we collected a new dataset explicitly designed and created for Human Computer Interaction. It contains 10 gesture types: zoom in, zoom out, scroll up, scroll down, slide left, slide right, rotate, back, ok, exit. The dataset also contains a "no action" class, in which subjects stand in front of the camera in a neutral pose. Gestures are performed by 10 subjects (not all subjects perform all actions), for a total of 168 instances. During acquisition, subjects stand in front of a stationary Kinect 1 device, and only the upper part of the body (shoulders, elbows, wrists and hands) is involved. All subjects perform each gesture with the same arm, regardless of whether they are left- or right-handed.

HOW TO: the main folder contains one subfolder per gesture. Each subfolder contains txt files (named typeAction_subject_instance.txt) that store joint coordinates in the following format: each row contains the 3D coordinates (x, y, z) and the tracking state of one joint. A skeleton has 20 joints, and their order is reported here (the dataset is acquired with Kinect 1). A sequence of n frames therefore has n*20 rows. No file contains invalid or null coordinates. RGB frames are not publicly available due to privacy constraints.
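The file layout above can be read with a short loader like the one below. The text does not specify the field separator, so the sketch assumes whitespace-separated values and also accepts commas. The tracking state is kept as the raw string found in the file, because its encoding is not stated. Names are split with `rsplit` so that an action name containing underscores is still parsed correctly. All function names are hypothetical.

```python
from pathlib import Path

NUM_JOINTS = 20  # Kinect 1 skeleton, as stated for Kinteract


def parse_skeleton_lines(lines, num_joints=NUM_JOINTS):
    """Turn rows of 'x y z state' into a list of frames, where each frame is a
    list of ((x, y, z), state) tuples, one per joint (assumed row layout)."""
    rows = []
    for line in lines:
        values = line.replace(",", " ").split()
        if not values:
            continue  # skip blank lines
        x, y, z = (float(v) for v in values[:3])
        rows.append(((x, y, z), values[3]))
    if len(rows) % num_joints != 0:
        raise ValueError(f"{len(rows)} rows is not a multiple of {num_joints}")
    return [rows[k:k + num_joints] for k in range(0, len(rows), num_joints)]


def parse_file_name(path):
    """Split 'typeAction_subject_instance.txt' into its three parts."""
    action, subject, instance = Path(path).stem.rsplit("_", 2)
    return action, subject, instance


def load_kinteract(root):
    """Load every <gesture folder>/*.txt file under root as
    (action, subject, instance, frames)."""
    samples = []
    for path in sorted(Path(root).glob("*/*.txt")):
        with open(path) as f:
            frames = parse_skeleton_lines(f)
        samples.append((*parse_file_name(path), frames))
    return samples
```

The multiple-of-20 check follows directly from the n*20 rows rule above. A file that fails it most likely uses a different layout from the one assumed here.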

Click here to download Kinteract Dataset (.rar format).

- MSRAction3D Dataset: click here for a detailed description (original Microsoft web site).

Click here for the complete list of actions removed from this dataset because of invalid (null) 3D coordinates or heavy joint-tracking noise.

- MSRDailyActivity3D Dataset: click here for a detailed description (original Microsoft web site).

- UTKinect-Action Dataset: click here for a detailed description

Publications

1. Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "Fast gesture recognition with Multiple Stream Discrete HMMs on 3D Skeletons". Proceedings of the 23rd International Conference on Pattern Recognition, Cancun, pp. 997-1002, Dec 4-8, 2016. DOI: 10.1109/ICPR.2016.7899766 (Conference)

2. Vezzani, Roberto; Baltieri, Davide; Cucchiara, Rita. "HMM Based Action Recognition with Projection Histogram Features". Recognizing Patterns in Signals, Speech, Images, and Videos, Lecture Notes in Computer Science, vol. 6388, Istanbul, Turkey, pp. 286-293, Aug 22, 2010. DOI: 10.1007/978-3-642-17711-8_29 (Conference)

3. Vezzani, Roberto; Piccardi, Massimo; Cucchiara, Rita. "An efficient Bayesian framework for on-line action recognition". Proceedings of the IEEE International Conference on Image Processing, Cairo, Egypt, pp. 3553-3556, Nov 7-11, 2009. DOI: 10.1109/ICIP.2009.5414340 (Conference)