Fit for Medical Robotics

Abstract: Fit for Medical Robotics aims to revolutionize current rehabilitation and assistive models for people of all ages with reduced or absent motor, sensory, or cognitive functions. It will do so through novel (bio)robotic and allied digital technologies, and through continuum-of-care paradigms that exploit these technologies in all phases of the rehabilitation process, from prevention to home care in the chronic phase. This will be made possible by carefully identifying the unmet needs of patients and healthcare practitioners, and by addressing them with current and novel (bio)robotic/bionic technologies, via multicentric clinical trials jointly conceived by bioengineers, neuroscientists, physiatrists, psychologists and functional/preventive limb surgeons. This new continuum-of-care paradigm will start from prevention and will target all phases of the disease, from acute (bedside) to chronic (home rehabilitation), and will contribute to the design of new pre-habilitation protocols and of diagnostic tools for fragile individuals or workers exposed to occupational diseases or repetitive stresses. Fit for Medical Robotics will focus both on technologies that are already available but not yet fully validated, and on emerging technologies or breakthrough ideas to be explored throughout the project. Hence, foundational studies involving new materials, algorithms, smart sensing and actuation technologies, as well as sustainable power sources, will be pursued to overcome the limitations of current robotic solutions, which have prevented their widespread adoption as physical care providers, and to pave the way to the next generation of biomedical robotic systems.
No less important, the clinical, scientific, and technological efforts will be matched on the policy, regulatory and organizational sides, in order to accelerate the setup of an adequate framework able to incorporate current and future technologies and protocols into the healthcare system in a sustainable manner, and to sustain the innovation they will bring about.

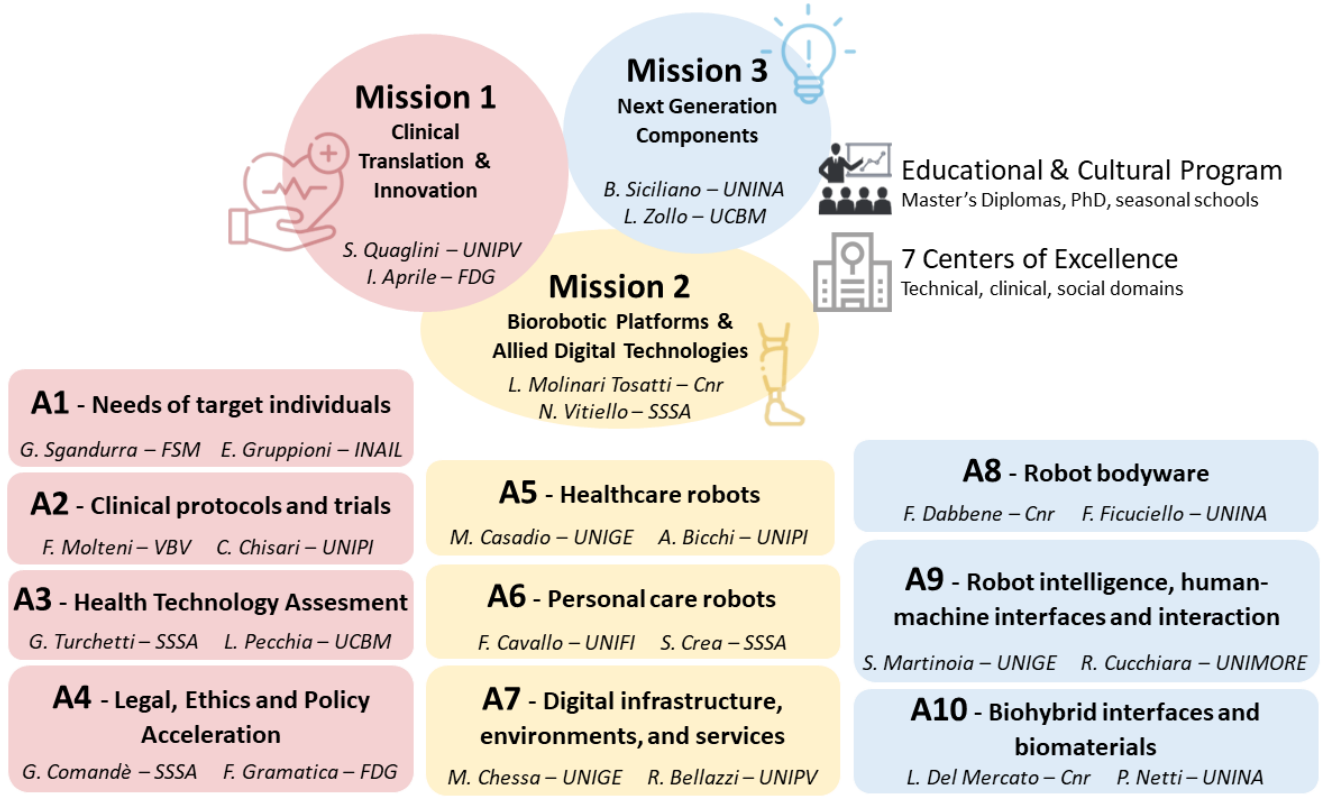

AImageLab is involved in Activities A8-A9 of the FIT4MEDROB project, working actively on a subproject on Human-AI Robot Interaction in Indoor Environments.

The goal of this subproject is to empower embodied agents and robots for healthcare and personal assistance with intelligent behaviours and interaction capabilities. Two complementary approaches will be exploited:

- Top-down techniques for translating human requests and human behaviours into commands for the robot through the development and training of Deep Learning-based policies, Computer Vision, and Natural Language Processing models. Generative AI theories will be developed to define robust and sustainable approaches to bidirectional interaction.

- Bottom-up methods based on interaction control to endow the robot with skills for safe physical human-robot interaction. In addition, new sensors may be defined to enrich the robot's interactivity with humans.
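As an illustration of what "interaction control" can mean in the bottom-up approach, the following is a minimal one-degree-of-freedom impedance control sketch. It is not the project's controller: the class name, the gains, the force cap `f_max`, and the point-mass simulation are all assumptions chosen for illustration. The commanded force behaves like a virtual spring-damper pulling the robot toward a target, and a simple saturation bounds the force applied to the human. A saturation of this kind is only a basic safeguard, not a formal safety guarantee.

```python
from dataclasses import dataclass


@dataclass
class ImpedanceController:
    """Hypothetical 1-DoF impedance law: F = K (x_d - x) + D (v_d - v)."""
    stiffness: float  # K [N/m], illustrative value
    damping: float    # D [N*s/m], illustrative value
    f_max: float      # cap on the commanded force [N], illustrative value

    def force(self, x, v, x_des, v_des=0.0):
        # Virtual spring-damper between the current and the desired state.
        f = self.stiffness * (x_des - x) + self.damping * (v_des - v)
        # Saturate the command so the interaction force stays bounded.
        return max(-self.f_max, min(self.f_max, f))


def simulate(ctrl, mass=1.0, x_des=0.1, dt=0.001, steps=5000):
    """Drive a point mass with the controller (semi-implicit Euler steps)."""
    x, v = 0.0, 0.0
    peak = 0.0
    for _ in range(steps):
        f = ctrl.force(x, v, x_des)
        peak = max(peak, abs(f))
        v += (f / mass) * dt  # update velocity first
        x += v * dt           # then position, using the new velocity
    return x, peak


ctrl = ImpedanceController(stiffness=100.0, damping=20.0, f_max=5.0)
final_x, peak_force = simulate(ctrl)
```

Lower stiffness makes the robot more compliant when a person pushes against it, at the cost of slower and less precise tracking. Adaptive impedance schemes adjust these gains online.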

The subproject aims to surpass the current state of the art by empowering robots with intelligent, personalized capabilities, with a strong focus on safe human-robot interaction and assistive robotics. This will be done through the development of multimodal architectures that integrate multiple signal sources about humans and the environment. The focus will be on indoor environments (e.g., houses, hospitals, public areas), which are in line with the aims of the FIT4MEDROB project and also provide a complex and interesting setting. Specifically, the subproject aims to study and develop:

- Embodied navigation algorithms with instructions given by coordinates (reach a point given its coordinates), objects (find and reach an object of the given category), and language (execute an instruction given in Natural Language).

- Extension of the communication scheme between humans and the robot to allow continuous exchange of information (e.g., multiple rounds of feedback during navigation).

- Human-AI interaction algorithms based on Computer Vision and the integration of multiple modalities (e.g., speech, emotions, text) coming from the human.

- Functional brain mapping by functional Near-Infrared Spectroscopy (fNIRS) to improve human-robot interactions. Cerebral blood flow maps obtained by fNIRS will be converted into actionable signals for the robot, encoding the user's neural activity as a sequence of robot commands.

- Personalization of the intelligent behaviour of the robot (e.g., recognize specific/personal objects, unlearn concepts).

- Physical human-robot interaction for rehabilitation purposes, based on virtual fixtures and adaptive impedance control with a safety guarantee.

- Explainability of the robot behaviour in interaction with humans.

- Safe interaction with the environment by exploiting interaction control techniques.

- Intuitive programming of robot manipulation for assistive applications, based, e.g., on kinesthetic teaching and task demonstration.
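The three ways of instructing the navigation agent listed above (coordinates, object category, natural language) can be pictured as three goal types sharing one interface. The sketch below is illustrative only: `PointGoal`, `ObjectGoal`, `LanguageGoal`, and `describe` are hypothetical names, not part of any FIT4MEDROB codebase, and a real system would dispatch each goal to a trained navigation policy instead of returning a string.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class PointGoal:
    """Reach a point given its coordinates (hypothetical type)."""
    xy: Tuple[float, float]


@dataclass(frozen=True)
class ObjectGoal:
    """Find and reach any object of the given category (hypothetical type)."""
    category: str


@dataclass(frozen=True)
class LanguageGoal:
    """Execute an instruction given in natural language (hypothetical type)."""
    instruction: str


Goal = Union[PointGoal, ObjectGoal, LanguageGoal]


def describe(goal: Goal) -> str:
    """Return a readable summary; a stand-in for dispatching to a policy."""
    if isinstance(goal, PointGoal):
        x, y = goal.xy
        return f"navigate to point ({x:.1f}, {y:.1f})"
    if isinstance(goal, ObjectGoal):
        return f"find and reach a '{goal.category}'"
    if isinstance(goal, LanguageGoal):
        return f"follow instruction: {goal.instruction}"
    raise TypeError(f"unsupported goal type: {type(goal).__name__}")


goals = [
    PointGoal((1.0, 2.5)),
    ObjectGoal("chair"),
    LanguageGoal("go to the kitchen and stop by the table"),
]
summaries = [describe(g) for g in goals]
```

Keeping the goal types separate makes it straightforward to add further modalities later, for example a goal that refers to a user-specific object instance.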

The subproject will integrate training and development in simulated environments with work on physical robots. In particular, we plan to employ mobile manipulators, that is, mobile platforms with manipulation capabilities provided by one or two arms equipped with suitable end-effectors, such as the TIAGO robot. Service robots specific to the devised applications can be developed through cascade calls. Moreover, collaborative robots, either available on the market or developed in the FIT4MEDROB project, will be exploited for safe interaction with humans in rehabilitation applications. Significant overlap is also expected with the activities of Missions 1 and 2, and the work will proceed in close collaboration with neuroscientists for emotion recognition and understanding.

The final goal is to develop new AI-based solutions and intelligent robot systems for a prototype of human-robot interaction in assistive healthcare, for possible experimentation at the end of the project in homes or in rehabilitation facilities.

Publications

| 1 | Bigazzi, Roberto; Baraldi, Lorenzo; Kousik, Shreyas; Cucchiara, Rita; Pavone, Marco "Mapping High-level Semantic Regions in Indoor Environments without Object Recognition" Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), vol. 2024, Yokohama, pp. 7686-7693, May 13-17, 2024 | DOI: 10.1109/ICRA57147.2024.10610897 Conference |

| 2 | Rawal, Niyati; Bigazzi, Roberto; Baraldi, Lorenzo; Cucchiara, Rita "AIGeN: An Adversarial Approach for Instruction Generation in VLN" Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, CVPRW 2024, Seattle, pp. 2070-2080, June 16-22, 2024 | DOI: 10.1109/CVPRW63382.2024.00212 Conference |

| 3 | Barsellotti, Luca; Bigazzi, Roberto; Cornia, Marcella; Baraldi, Lorenzo; Cucchiara, Rita "Personalized Instance-based Navigation Toward User-Specific Objects in Realistic Environments" Proceedings of the 38th Conference on Neural Information Processing Systems, NeurIPS 2024, vol. 37, Vancouver, Canada, December 9-15, 2024 Conference |

| 4 | Bigazzi, Roberto; Cornia, Marcella; Cascianelli, Silvia; Baraldi, Lorenzo; Cucchiara, Rita "Embodied Agents for Efficient Exploration and Smart Scene Description" Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), vol. 2023-May, London, pp. 6057-6064, May 29 - June 2, 2023 | DOI: 10.1109/ICRA48891.2023.10160668 Conference |

| 5 | Poppi, Samuele; Rawal, Niyati; Bigazzi, Roberto; Cornia, Marcella; Cascianelli, Silvia; Baraldi, Lorenzo; Cucchiara, Rita "Towards Explainable Navigation and Recounting" Image Analysis and Processing, ICIAP 2023, Pt. I, vol. 14233, Udine, Italy, pp. 171-183, September 11-15, 2023 | DOI: 10.1007/978-3-031-43148-7_15 Conference |

Project Info

Staff:

- Rita Cucchiara

- Costantino Grana

- Roberto Vezzani

- Lorenzo Baraldi

- Roberto Bigazzi

- Elisa Ficarra

- Niyati Rawal