Mercury: a framework for Driver Monitoring and Human Car Interaction

The deep learning-based models and frameworks investigated at AImageLab have been used to build a framework that monitors the driver's attention level and enables interaction between the driver and the car through the Natural User Interfaces paradigm. Driver attention is difficult to define univocally and has several nuances. For this reason, we focus on attention understood as the driver's level of fatigue, computed through the PERCLOS measure, and on the fine and coarse estimation of the driver's gaze.

Loss of vehicle control is a common problem caused by driving distractions, which are also linked to the driver's stress, fatigue and poor psycho-physical conditions. Indeed, humans are easily distracted: they struggle to keep a constant level of concentration and show signs of drowsiness after only a few hours of driving. Furthermore, the arrival of (semi-)autonomous cars and the necessary transition period, in which traditional and autonomous vehicles will coexist, will further increase the already high interest in driver attention monitoring systems, since for legal, moral and ethical reasons the driver must be ready to take control of the (semi-)autonomous car.

Therefore, we propose a Driver Monitoring System, here referred to as Mercury, based on Computer Vision and Deep Learning algorithms.

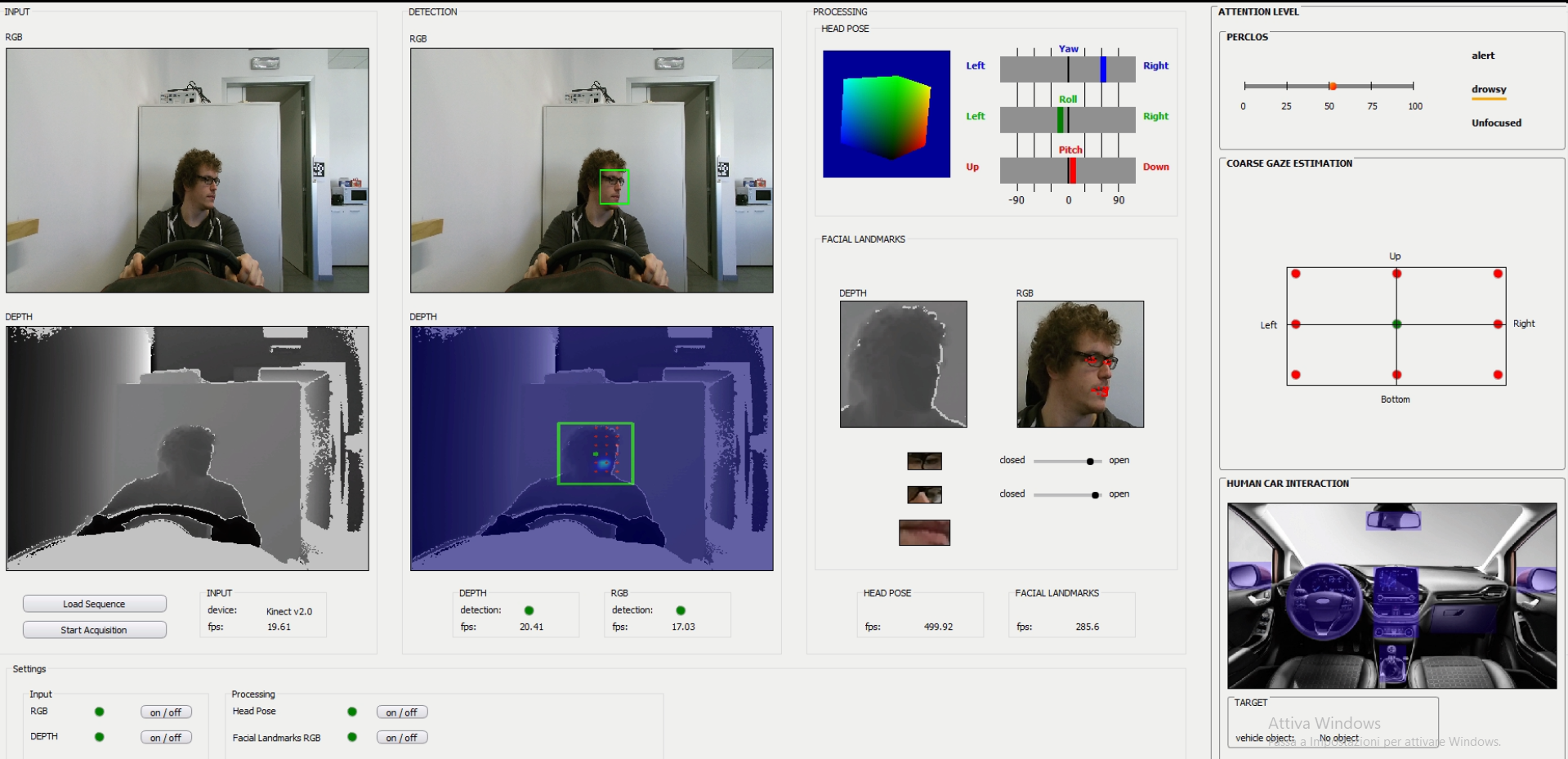

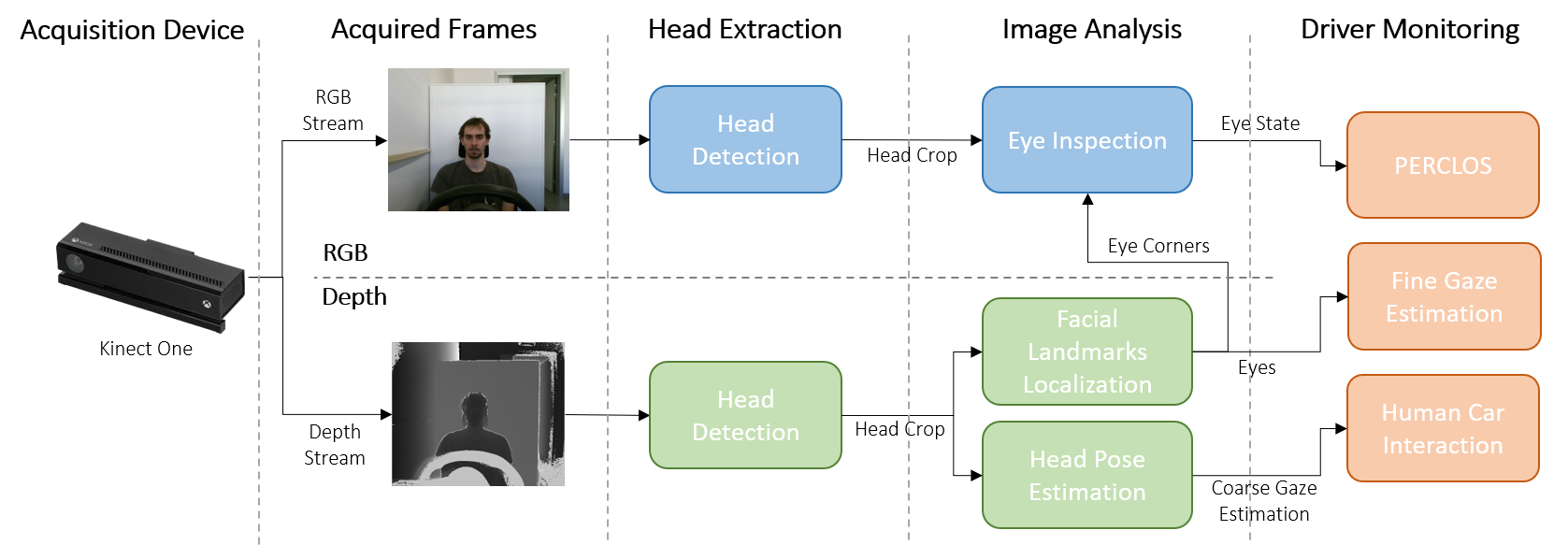

From a technical point of view, the Mercury framework is composed of several stages. The whole architecture of the system is represented in the following figure. The system has a modular design: it can work with RGB data only, with depth data only, or with both.

Acquisition Module

- Microsoft Kinect One: although more recent depth sensors with form factors better suited to the automotive field are available on the market, this device has been preferred because of the availability and relative simplicity of its proprietary SDK, which is compatible with the Python programming language, and because it combines an excellent color camera with a depth sensor that has good spatial resolution and a low noise level. Moreover, its minimum acquisition range allows its use in the automotive context, since in our case the device is placed in front of the driver, near the car dashboard.

The technical specifications of the device are the following:

- Full HD RGB camera: the spatial resolution is 1920×1080 and the camera can acquire up to 30 frames per second;

- Depth sensor: the spatial resolution is 512×424. This sensor provides depth information as a two-dimensional array of pixels, namely a depth map. Since each pixel stores its distance from the camera in millimeters, depth images are coded in 16 bits;

- Infrared emitter: this device is based on Time-of-Flight (ToF) technology and its range goes from 0.5 up to 7 meters;

- Microphone array: the device includes an array of microphones. Audio data are sampled at 16 kHz and coded with 16 bits. The array is used to pick up voice commands, to calibrate the peripheral and to suppress white noise. The microphones are not used in this project.
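As a minimal sketch of how such 16-bit depth maps might be prepared for a network, the snippet below masks invalid pixels and rescales the remaining ones to [0, 1]. The clipping range reuses the 0.5-7 m figure quoted above; the function name `normalize_depth` and the linear scaling are assumptions for illustration, not Mercury's documented preprocessing.

```python
from __future__ import annotations

import numpy as np

# Assumed valid range, reusing the 0.5-7 m figure quoted in the text.
MIN_DEPTH_MM = 500
MAX_DEPTH_MM = 7000


def normalize_depth(depth_mm: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Scale a 16-bit depth map (millimeters) to float32 values in [0, 1].

    Returns the normalized map and a boolean mask of valid pixels.
    Pixels reading 0 (no ToF return) or falling outside the assumed range
    are marked invalid and set to 0 in the output.
    """
    if depth_mm.dtype != np.uint16:
        raise ValueError("expected a uint16 depth map")
    depth = depth_mm.astype(np.float32)
    valid = (depth >= MIN_DEPTH_MM) & (depth <= MAX_DEPTH_MM)
    out = np.zeros_like(depth)
    # Linear scaling: the nearest valid distance maps to 0, the farthest to 1.
    out[valid] = (depth[valid] - MIN_DEPTH_MM) / (MAX_DEPTH_MM - MIN_DEPTH_MM)
    return out, valid
```

Returning the mask alongside the map keeps "missing" distinguishable from "at the near limit", since both end up as 0 in the normalized image.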

Head Extraction

- RGB images: we use the Viola-Jones algorithm, provided by the dlib library. Despite the availability of more recent deep learning-based algorithms, it is still a valid choice thanks to its effectiveness and speed. Moreover, many car companies still use derivatives of this algorithm.

- Depth maps: we have developed our own algorithms to perform fast head detection through a Fully Convolutional Network (FCN). The results have been published in two papers (VISAPP and ICPR).
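The speed credited to Viola-Jones above rests largely on the integral image, which lets the rectangular Haar-like features scanned by the detector's cascade be evaluated in constant time, whatever their size. The following standalone NumPy sketch illustrates only that step. It is not dlib's detector or the code used in Mercury, and the function names are hypothetical.

```python
import numpy as np


def integral_image(img: np.ndarray) -> np.ndarray:
    """Summed-area table padded with a leading row and column of zeros.

    ii[y, x] holds the sum of img[:y, :x], so any rectangle sum needs
    only four lookups.
    """
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii: np.ndarray, y: int, x: int, h: int, w: int) -> int:
    """Sum of the h x w rectangle whose top-left corner is (y, x)."""
    return int(ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x])


def two_rect_feature(ii: np.ndarray, y: int, x: int, h: int, w: int) -> int:
    """Horizontal two-rectangle Haar-like feature: left half minus right half.

    w should be even; the response is large when the left half is brighter.
    """
    half = w // 2
    return rect_sum(ii, y, x, h, half) - rect_sum(ii, y, x + half, h, half)
```

A cascade evaluates thousands of such features per window, so making each one cost four array lookups is what keeps the detector fast on a CPU.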

The output of the RGB and depth head detection modules is the head crop, i.e. a bounding box containing the driver's face with minimal background.
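One simple way to obtain a head crop of roughly constant physical size from a depth map is the pinhole relation: an object of size S at distance Z spans about f * S / Z pixels, where f is the focal length in pixels. The sketch below assumes the head center pixel is already known (for instance from a head detector) and uses a hypothetical focal length and crop size. It illustrates the idea rather than reproducing the detectors described above.

```python
import numpy as np

# Assumptions for illustration only: an approximate focal length (pixels)
# for a 512x424 ToF depth camera and a physical crop size around the head.
FOCAL_PX = 365.0
HEAD_SIZE_MM = 250.0


def crop_head(depth_mm: np.ndarray, cx: int, cy: int) -> np.ndarray:
    """Crop a square around (cx, cy) covering HEAD_SIZE_MM at the head depth.

    The side in pixels follows the pinhole relation side = f * S / Z, so the
    crop shrinks as the head moves away. The window is clipped to the image.
    """
    z = float(depth_mm[cy, cx])
    if z <= 0:
        raise ValueError("no valid depth at the head center")
    side = int(round(FOCAL_PX * HEAD_SIZE_MM / z))
    half = side // 2
    h, w = depth_mm.shape
    top, left = cy - half, cx - half
    y0, y1 = max(top, 0), min(top + side, h)
    x0, x1 = max(left, 0), min(left + side, w)
    return depth_mm[y0:y1, x0:x1]
```

For a head 1 m from the sensor these values give a crop of about 91×91 pixels, and roughly half that at 2 m.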

Image Analysis

- Head Pose Estimation: the head crop is used to produce three different inputs for the Head Pose Estimation module. The first input is the raw depth map, while the second is a Motion Image, obtained by running the Farneback optical flow algorithm on a sequence of depth images. The third input is generated by a network called Face-from-Depth (TPAMI), which reconstructs gray-level face images from the corresponding depth images. All three inputs are then processed by a regression Convolutional Neural Network (CNN) (CVPR) that outputs the yaw, pitch and roll angles of the head as continuous values.

- Facial Landmark Localization: the goal of this module is a reliable estimation of the facial landmark coordinates, i.e. salient points of the face such as the eyes, eyebrows, mouth, nose and jawline. Due to the limited spatial resolution of the available depth images, we focus on a selection of five facial landmarks: the two eye pupils, the two mouth corners and the nose tip. The core of the method is a regression CNN (ICIAP) that receives a stream of depth images as input. The system runs in real time and, thanks to the use of depth images as input, it is more reliable than state-of-the-art competitors under poor illumination and light changes.

- Eye State Analysis: the facial landmarks are used to obtain the bounding boxes of the driver's right and left eyes; these crops are then analyzed by a CNN that determines whether each eye is open or closed.
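The text does not say how the eye boxes are sized. A plausible sketch, assuming the two pupil landmarks are available in pixel coordinates, centers a square on each pupil with a side proportional to the interpupillary distance, so the crops scale with the apparent face size. `eye_boxes`, `crop` and `EYE_BOX_RATIO` are hypothetical names and values.

```python
import numpy as np

# Hypothetical ratio: each eye box side is this fraction of the
# interpupillary distance. The text does not specify the box size.
EYE_BOX_RATIO = 0.5


def eye_boxes(left_pupil, right_pupil, ratio=EYE_BOX_RATIO):
    """Return two square boxes (x0, y0, x1, y1) centered on the pupils."""
    lp = np.asarray(left_pupil, dtype=float)
    rp = np.asarray(right_pupil, dtype=float)
    half = ratio * np.linalg.norm(rp - lp) / 2.0
    boxes = []
    for p in (lp, rp):
        # Round outward so the box fully contains the ideal square.
        x0, y0 = np.floor(p - half).astype(int)
        x1, y1 = np.ceil(p + half).astype(int)
        boxes.append((int(x0), int(y0), int(x1), int(y1)))
    return boxes


def crop(img, box):
    """Crop img to box (x0, y0, x1, y1), clipping to the image bounds."""
    x0, y0, x1, y1 = box
    h, w = img.shape[:2]
    return img[max(y0, 0):min(y1, h), max(x0, 0):min(x1, w)]
```

Each crop would then be resized to the input size expected by the open/closed classifier.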

Driver Monitoring

- PERCLOS: the driver's attention and fatigue level is analyzed through the indicator called PERCLOS (PERcentage of eyelid CLOSure), introduced in 1994. This measure expresses the percentage of time, within one minute, during which the eyes are between 80% and 100% closed; the resulting numerical value can then be analyzed to determine the driver's fatigue level. Blinks, identifiable as almost instantaneous closures of the eye, are excluded from the computation: only prolonged and slow closures, usually called droops, are counted.

The level of driver attention is then classified through three thresholds:

- Alert (PERCLOS < 0.3): good level of attention, optimal driver conditions;

- Drowsy (0.3 ≤ PERCLOS ≤ 0.7): first signs of inattention and fatigue; the driver is not in optimal condition, which introduces some risk;

- Unfocused (PERCLOS > 0.7): complete lack of attention; the driver is in poor physical condition or is sleeping.
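A minimal sketch of the PERCLOS computation and of the three-level classification above. It assumes a per-frame boolean eye state, such as the output of the open/closed CNN, a one-minute window sampled at 30 fps, and a hypothetical 0.5 s minimum duration separating droops from blinks (the text gives no such value). Treating the classifier's "closed" label as the 80-100% closure condition is also an assumption.

```python
import numpy as np

# Assumptions (not from the text): 30 fps input and a 0.5 s minimum
# closure length that separates droops from blinks.
FPS = 30
MIN_DROOP_S = 0.5


def perclos(closed, fps=FPS, min_droop_s=MIN_DROOP_S):
    """PERCLOS over one window of per-frame eye states.

    `closed` is a boolean sequence (True = eye closed), ideally covering one
    minute. Closed runs shorter than `min_droop_s` are treated as blinks and
    ignored; the result is the fraction of frames in longer runs (droops).
    """
    closed = np.asarray(closed, dtype=bool)
    if closed.size == 0:
        raise ValueError("empty window")
    min_len = int(round(min_droop_s * fps))
    droop_frames = 0
    run = 0
    # An "open" sentinel at the end ensures a trailing closure is evaluated.
    for is_closed in np.append(closed, False):
        if is_closed:
            run += 1
        else:
            if run >= min_len:
                droop_frames += run
            run = 0
    return droop_frames / closed.size


def attention_level(p):
    """Map PERCLOS to the three levels; 0.3 and 0.7 count as "drowsy"
    (the boundary handling is an assumption)."""
    if p < 0.3:
        return "alert"
    if p <= 0.7:
        return "drowsy"
    return "unfocused"
```

For example, a one-minute window (1800 frames) containing a single 30 s closure plus a few short blinks gives PERCLOS = 0.5, which falls in the drowsy band; the blinks contribute nothing.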

- Coarse Gaze Estimation: head pose and eye location information are combined to estimate the driver's gaze. This information is used to create an interactive system in which the driver could, for example, select an object in the cabin simply by orienting their face towards it. In particular, the following geometric model has been developed.
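The geometric model mentioned above is not reproduced in this text, so the following is only a simple stand-in. It turns the estimated yaw and pitch into a unit direction vector and selects the cabin object whose direction from the head is angularly closest, within a tolerance. The coordinate frame, sign conventions, object positions and the 15° tolerance are all hypothetical.

```python
import numpy as np

# Hypothetical cabin frame centered on the driver's head: x to the right,
# y up, z forward. Object positions (meters) are made up for illustration.
CABIN_OBJECTS = {
    "infotainment": np.array([0.45, -0.25, 0.70]),
    "left_mirror": np.array([-0.70, 0.05, 0.60]),
    "speedometer": np.array([0.00, -0.20, 0.70]),
}


def face_direction(yaw_deg: float, pitch_deg: float) -> np.ndarray:
    """Unit vector of the face orientation.

    Assumed conventions: positive yaw turns right, positive pitch looks up.
    Roll does not change where the face points, so it is not needed.
    """
    yaw, pitch = np.radians(yaw_deg), np.radians(pitch_deg)
    return np.array([np.sin(yaw) * np.cos(pitch),
                     np.sin(pitch),
                     np.cos(yaw) * np.cos(pitch)])


def select_object(yaw_deg, pitch_deg, objects=CABIN_OBJECTS, max_angle_deg=15.0):
    """Return the object angularly closest to the face direction, or None
    if no object lies within max_angle_deg."""
    d = face_direction(yaw_deg, pitch_deg)
    best, best_angle = None, max_angle_deg
    for name, pos in objects.items():
        v = pos / np.linalg.norm(pos)
        # Clip guards arccos against rounding slightly outside [-1, 1].
        angle = np.degrees(np.arccos(np.clip(np.dot(d, v), -1.0, 1.0)))
        if angle <= best_angle:
            best, best_angle = name, angle
    return best
```

Using an angular tolerance rather than an exact ray hit makes the selection robust to the noise of a coarse head pose estimate; in practice the head position and object layout would come from calibration of the actual cabin.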

Implementation

The Mercury framework has been implemented and tested on a computer equipped with an Intel Core i7-7700K processor, 32 GB of RAM and an Nvidia GeForce GTX 1080 Ti GPU.

The deep learning components have been implemented with the Keras framework, using TensorFlow as the backend. Finally, a graphical interface has been built with the Qt libraries, as detailed in the following paragraph.

Since the aforementioned hardware is not suitable for the automotive context because of its cost and power consumption, we have started porting these systems to embedded boards (IV). We chose the Nvidia Jetson TX2 board, since it is equipped with an embedded GPU suitable for deep learning-based algorithms.

Publications

1. Borghi, Guido; Fabbri, Matteo; Vezzani, Roberto; Calderara, Simone; Cucchiara, Rita. "Face-from-Depth for Head Pose Estimation on Depth Images". IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, pp. 596-609, 2020. DOI: 10.1109/TPAMI.2018.2885472. (Journal)

2. Ballotta, Diego; Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "Head Detection with Depth Images in the Wild". Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2018), vol. 5, pp. 56-63, Funchal, Portugal, 27-29 January 2018. DOI: 10.5220/0006541000560063. (Conference)

3. Venturelli, Marco; Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "Deep Head Pose Estimation from Depth Data for In-car Automotive Applications". Proceedings of the 2nd International Workshop on Understanding Human Activities through 3D Sensors, vol. 10188, pp. 74-85, Cancun, Mexico, December 4, 2016; 2018. DOI: 10.1007/978-3-319-91863-1_6. (Conference)

4. Borghi, Guido; Venturelli, Marco; Vezzani, Roberto; Cucchiara, Rita. "POSEidon: Face-from-Depth for Driver Pose Estimation". Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 2017-, pp. 5494-5503, Honolulu, Hawaii, July 22-25, 2017. DOI: 10.1109/CVPR.2017.583. (Conference)

5. Borghi, Guido; Gasparini, Riccardo; Vezzani, Roberto; Cucchiara, Rita. "Embedded Recurrent Network for Head Pose Estimation in Car". Proceedings of the 2017 IEEE Intelligent Vehicles Symposium, Redondo Beach, CA, USA, June 11-14, 2017. (Conference)

6. Frigieri, Elia; Borghi, Guido; Vezzani, Roberto; Cucchiara, Rita. "Fast and Accurate Facial Landmark Localization in Depth Images for In-car Applications". Proceedings of the 19th International Conference on Image Analysis and Processing, pp. 539-549, Catania, 11-15 September 2017. DOI: 10.1007/978-3-319-68560-1_48. (Conference)